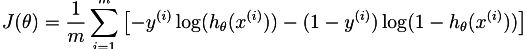

I just started taking Andrew Ng's course on Machine Learning on Coursera. The topic of the third week is logistic regression, so I am trying to implement the following cost function.

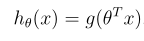

The hypothesis is defined as:

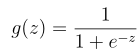

where g is the sigmoid function, g(z) = 1 / (1 + e^(-z)).

This is how my function looks at the moment:

```matlab
function [J, grad] = costFunction(theta, X, y)

m = length(y); % number of training examples
S = 0;
J = 0;

for i=1:m
    Yi = y(i);
    Xi = X(i,:);
    H = sigmoid(transpose(theta).*Xi);
    S = S + ((-Yi)*log(H)-((1-Yi)*log(1-H)));
end

J = S/m;

end
```

Given the following values

```matlab
X = [magic(3) ; magic(3)];
y = [1 0 1 0 1 0]';
[j g] = costFunction([0 1 0]', X, y)
```

j comes back as the vector 0.6931 2.6067 0.6931, even though the result should be the scalar j = 2.6067. I am assuming that there is a problem with Xi, but I just can't see the error.

I would be very thankful if someone could point me in the right direction.

You are supposed to apply the sigmoid function to the dot product of your parameter vector (theta) and input vector (Xi, which in this case is a row vector). So, you should change

```matlab
H = sigmoid(transpose(theta).*Xi);
```

to

```matlab
H = sigmoid(theta' * Xi'); % or sigmoid(Xi * theta)
```
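To see why the original line gave three values instead of one, it may help to compare the two products on the first training example from the question. This is only an illustration; the names `elementwise` and `dotproduct` are made up here.

```matlab
theta = [0 1 0]';
Xi = [8 1 6];                 % first row of magic(3)

elementwise = theta' .* Xi;   % 1-by-3 vector [0 1 0]: one value per feature
dotproduct  = Xi * theta;     % scalar 1: the single input the sigmoid should receive
```

Since sigmoid works element by element, feeding it the 1-by-3 vector makes H, S and finally J 1-by-3 as well.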

Of course, you need to make sure that the bias input 1 is added to your inputs (a column of 1s prepended to X, since each row of X is one example).
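If your X does not already contain the bias input, a minimal sketch of adding it could look like this. The name `Xb` is an assumption for illustration, and theta then needs one extra entry for the bias term.

```matlab
X = [magic(3); magic(3)];        % raw features from the question, 6-by-3
m = size(X, 1);                  % number of training examples
Xb = [ones(m, 1) X];             % 6-by-4: column of ones first, then the features
theta = zeros(size(Xb, 2), 1);   % one parameter per column, including the bias
```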

Next, think about how you can vectorize this entire operation so that it can be written without any loops. That way it would be considerably faster.
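As a rough sketch of what a loop-free version might look like, using the data from the question: the sigmoid is defined inline here as an assumption (your own sigmoid.m would normally be used), and the gradient line is the standard logistic regression gradient, which the code in the question does not compute.

```matlab
% Assumption: inline stand-in for the sigmoid function used in the question
sigmoid = @(z) 1 ./ (1 + exp(-z));

X = [magic(3); magic(3)];   % six examples, three features, as in the question
y = [1 0 1 0 1 0]';
theta = [0 1 0]';
m = length(y);              % number of training examples

h = sigmoid(X * theta);                           % m-by-1 predictions, one per example
J = (-y' * log(h) - (1 - y)' * log(1 - h)) / m;   % scalar cost, about 2.6067 here
grad = (X' * (h - y)) / m;                        % gradient, same size as theta
```

The matrix product `X * theta` computes all six dot products at once, and the inner products with `y'` and `(1 - y)'` replace the running sum S.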
