Given a dictionary of words and an initial character. find the longest possible word in the dictionary by successively adding a character to the word. At any given instance the word should be valid word in the dictionary.

ex : a -> at -> cat -> cart -> chart ....

If you want to do this once, I'd do the following (generalized to the problem of starting with a full word):

Take your entire dictionary and throw away anything that does not have a superset of the characters in your target word (let's say it has length m). Then bin the remaining words by length. For each word of length m+1, try dropping each letter and see if that yields your desired word. If not, toss it. Then check each word of length m+2 against the valid set of length m+1, dropping any that can't be reduced. Keep going until you find an empty set; the last thing(s) you found will be the longest.

If you want to make this fast to look up, I'd build a suffix-tree-like data structure.

Group all words by length. For each word of length 2, place each of its two characters in a "subword" set, and add that word to each of the characters' "superword" sets. Now you've got a link between all valid words of length 2 and all characters. Do the same with words of length 3 and valid words of length 2. Now you can start anywhere in this hierarchy and do a breadth-first search to find the deepest branch.

Edit: the speed of this solution will depend greatly on the structure of the language, but if we decide to build everything using sets with log(n) performance for all operations (i.e. we use red-black trees or the like), and we have N(m) words of length m, then to form the link between words of length m+1 and m will approximately (m+1)*m*N(m+1)*log(N(m)) time (taking into account that string compares take linear time in the length of the string). Since we have to do this for all word lengths, the runtime for building the full data structure will be something on the order of

(typical word length)^3 * (dictionary length) * log (dictionary length / word length)

(The initial binning into words of a certain length will take linear time so can be neglected; the actual formula for runtime is complicated because it depends on the distribution of word lengths; for the case where you're doing it from a single word it's even more complicated because it depends on the expected number of longer words that have shorter subwords.)

The brute force approach would be to try adding letters to each available index using a depth-first search.

So, starting with 'a', there are two places you can add a new letter. In front or behind the 'a', represented by dots below.

.a.

If you add a 't', there are now three positions.

.a.t.

You can try adding all 26 letters to each available position. The dictionary in this case can be a simple hashtable. If you add a 'z' in the middle, you get 'azt' which would not be in the hashtable so you don't continue down that path in the search.

Edit: Nick Johnson's graph made me curious what a graph of all maximal paths would look like. It's a large (1.6 MB) image here:

http://www.michaelfogleman.com/static/images/word_graph.png

Edit: Here's a Python implementation. The brute-force approach actually runs in a reasonable amount of time (a few seconds, depending on the starting letter).

import heapq

letters = 'abcdefghijklmnopqrstuvwxyz'

def search(words, word, path):

path.append(word)

yield tuple(path)

for i in xrange(len(word)+1):

before, after = word[:i], word[i:]

for c in letters:

new_word = '%s%s%s' % (before, c, after)

if new_word in words:

for new_path in search(words, new_word, path):

yield new_path

path.pop()

def load(path):

result = set()

with open(path, 'r') as f:

for line in f:

word = line.lower().strip()

result.add(word)

return result

def find_top(paths, n):

gen = ((len(x), x) for x in paths)

return heapq.nlargest(n, gen)

if __name__ == '__main__':

words = load('TWL06.txt')

gen = search(words, 'b', [])

top = find_top(gen, 10)

for path in top:

print path

Of course, there will be a lot of ties in the answer. This will print the top N results, measured by length of the final word.

Output for starting letter 'a', using the TWL06 Scrabble dictionary.

(10, ('a', 'ta', 'tap', 'tape', 'taped', 'tamped', 'stamped', 'stampede', 'stampedes', 'stampeders'))

(10, ('a', 'ta', 'tap', 'tape', 'taped', 'tamped', 'stamped', 'stampede', 'stampeder', 'stampeders'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'strangle', 'strangles', 'stranglers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'strangle', 'strangler', 'stranglers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'stranges', 'strangles', 'stranglers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'stranges', 'strangers', 'stranglers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'stranges', 'strangers', 'estrangers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'stranges', 'estranges', 'estrangers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'stranger', 'strangler', 'stranglers'))

(10, ('a', 'ta', 'tan', 'tang', 'stang', 'strang', 'strange', 'stranger', 'strangers', 'stranglers'))

And here are the results for each starting letter. Of course, an exception is made that the single starting letter doesn't have to be in the dictionary. Just some 2-letter word that can be formed with it.

(10, ('a', 'ta', 'tap', 'tape', 'taped', 'tamped', 'stamped', 'stampede', 'stampedes', 'stampeders'))

(9, ('b', 'bo', 'bos', 'bods', 'bodes', 'bodies', 'boodies', 'bloodies', 'bloodiest'))

(1, ('c',))

(10, ('d', 'od', 'cod', 'coed', 'coped', 'comped', 'compted', 'competed', 'completed', 'complected'))

(10, ('e', 're', 'rue', 'ruse', 'ruses', 'rouses', 'arouses', 'carouses', 'carousels', 'carrousels'))

(9, ('f', 'fe', 'foe', 'fore', 'forge', 'forges', 'forgoes', 'forgoers', 'foregoers'))

(10, ('g', 'ag', 'tag', 'tang', 'stang', 'strang', 'strange', 'strangle', 'strangles', 'stranglers'))

(9, ('h', 'sh', 'she', 'shes', 'ashes', 'sashes', 'slashes', 'splashes', 'splashers'))

(11, ('i', 'pi', 'pin', 'ping', 'oping', 'coping', 'comping', 'compting', 'competing', 'completing', 'complecting'))

(7, ('j', 'jo', 'joy', 'joky', 'jokey', 'jockey', 'jockeys'))

(9, ('k', 'ki', 'kin', 'akin', 'takin', 'takins', 'takings', 'talkings', 'stalkings'))

(10, ('l', 'la', 'las', 'lass', 'lassi', 'lassis', 'lassies', 'glassies', 'glassines', 'glassiness'))

(10, ('m', 'ma', 'mas', 'mars', 'maras', 'madras', 'madrasa', 'madrassa', 'madrassas', 'madrassahs'))

(11, ('n', 'in', 'pin', 'ping', 'oping', 'coping', 'comping', 'compting', 'competing', 'completing', 'complecting'))

(10, ('o', 'os', 'ose', 'rose', 'rouse', 'rouses', 'arouses', 'carouses', 'carousels', 'carrousels'))

(11, ('p', 'pi', 'pin', 'ping', 'oping', 'coping', 'comping', 'compting', 'competing', 'completing', 'complecting'))

(3, ('q', 'qi', 'qis'))

(10, ('r', 're', 'rue', 'ruse', 'ruses', 'rouses', 'arouses', 'carouses', 'carousels', 'carrousels'))

(10, ('s', 'us', 'use', 'uses', 'ruses', 'rouses', 'arouses', 'carouses', 'carousels', 'carrousels'))

(10, ('t', 'ti', 'tin', 'ting', 'sting', 'sating', 'stating', 'estating', 'restating', 'restarting'))

(10, ('u', 'us', 'use', 'uses', 'ruses', 'rouses', 'arouses', 'carouses', 'carousels', 'carrousels'))

(1, ('v',))

(9, ('w', 'we', 'wae', 'wake', 'wakes', 'wackes', 'wackest', 'wackiest', 'whackiest'))

(8, ('x', 'ax', 'max', 'maxi', 'maxim', 'maxima', 'maximal', 'maximals'))

(8, ('y', 'ye', 'tye', 'stye', 'styed', 'stayed', 'strayed', 'estrayed'))

(8, ('z', 'za', 'zoa', 'zona', 'zonae', 'zonate', 'zonated', 'ozonated'))

Assuming you need to do this repeatedly (or you want the answer for every one of the 26 letters), do it backwards:

Then, to get the chain for a given prefix, simply start with that prefix and repeatedly look it and its extensions up in the dictionary.

Here's the sample Python code:

words = [x.strip().lower() for x in open('/usr/share/dict/words')]

words.sort(key=lambda x:len(x), reverse=True)

word_map = {} # Maps words to (extension, max_len) tuples

for word in words:

if word in word_map:

max_len = word_map[word][1]

else:

max_len = len(word)

for i in range(len(word)):

new_word = word[:i] + word[i+1:]

if new_word not in word_map or word_map[new_word][2] < max_len:

word_map[new_word] = (word, max_len)

# Get a chain for each letter

for term in "abcdefghijklmnopqrstuvwxyz":

chain = [term]

while term in word_map:

term = word_map[term][0]

chain.append(term)

print chain

And its output for each letter of the alphabet:

['a', 'ah', 'bah', 'bach', 'brach', 'branch', 'branchi', 'branchia', 'branchiae', 'branchiate', 'abranchiate']

['b', 'ba', 'bac', 'bach', 'brach', 'branch', 'branchi', 'branchia', 'branchiae', 'branchiate', 'abranchiate']

['c', 'ca', 'cap', 'camp', 'campo', 'campho', 'camphor', 'camphory', 'camphoryl', 'camphoroyl']

['d', 'ad', 'cad', 'card', 'carid', 'carida', 'caridea', 'acaridea', 'acaridean']

['e', 'er', 'ser', 'sere', 'secre', 'secret', 'secreto', 'secretor', 'secretory', 'asecretory']

['f', 'fo', 'fot', 'frot', 'front', 'afront', 'affront', 'affronte', 'affronted']

['g', 'og', 'log', 'logy', 'ology', 'oology', 'noology', 'nosology', 'nostology', 'gnostology']

['h', 'ah', 'bah', 'bach', 'brach', 'branch', 'branchi', 'branchia', 'branchiae', 'branchiate', 'abranchiate']

['i', 'ai', 'lai', 'lain', 'latin', 'lation', 'elation', 'delation', 'dealation', 'dealbation']

['j', 'ju', 'jug', 'juga', 'jugal', 'jugale']

['k', 'ak', 'sak', 'sake', 'stake', 'strake', 'straked', 'streaked']

['l', 'la', 'lai', 'lain', 'latin', 'lation', 'elation', 'delation', 'dealation', 'dealbation']

['m', 'am', 'cam', 'camp', 'campo', 'campho', 'camphor', 'camphory', 'camphoryl', 'camphoroyl']

['n', 'an', 'lan', 'lain', 'latin', 'lation', 'elation', 'delation', 'dealation', 'dealbation']

['o', 'lo', 'loy', 'logy', 'ology', 'oology', 'noology', 'nosology', 'nostology', 'gnostology']

['p', 'pi', 'pig', 'prig', 'sprig', 'spring', 'springy', 'springly', 'sparingly', 'sparringly']

['q']

['r', 'ra', 'rah', 'rach', 'brach', 'branch', 'branchi', 'branchia', 'branchiae', 'branchiate', 'abranchiate']

['s', 'si', 'sig', 'spig', 'sprig', 'spring', 'springy', 'springly', 'sparingly', 'sparringly']

['t', 'ut', 'gut', 'gutt', 'gutte', 'guttle', 'guttule', 'guttulae', 'guttulate', 'eguttulate']

['u', 'ut', 'gut', 'gutt', 'gutte', 'guttle', 'guttule', 'guttulae', 'guttulate', 'eguttulate']

['v', 'vu', 'vum', 'ovum']

['w', 'ow', 'low', 'alow', 'allow', 'hallow', 'shallow', 'shallowy', 'shallowly']

['x', 'ox', 'cox', 'coxa', 'coxal', 'coaxal', 'coaxial', 'conaxial']

['y', 'ly', 'loy', 'logy', 'ology', 'oology', 'noology', 'nosology', 'nostology', 'gnostology']

['z', 'za', 'zar', 'izar', 'izard', 'izzard', 'gizzard']

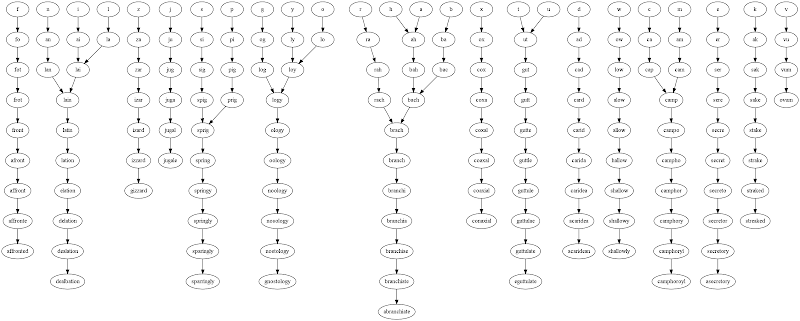

Edit: Given the degree to which branches merge towards the end, I thought it would be interesting to draw a graph to demonstrate this:

An interesting extension of this challenge: It's likely there are several equilength final words for some letters. Which set of chains minimizes the number of final nodes (eg, merges the most letters)?

If you love us? You can donate to us via Paypal or buy me a coffee so we can maintain and grow! Thank you!

Donate Us With