I'm a noob at WebGL. I've read several posts that talk about ND-Buffers and G-Buffers as if choosing between them were a strategic decision for WebGL development.

How are ND-Buffers and G-Buffers related to rendering pipelines? Are ND-Buffers used only in forward-rendering and G-Buffers only in deferred-rendering?

A JavaScript code example showing how to implement both would help me understand the difference.

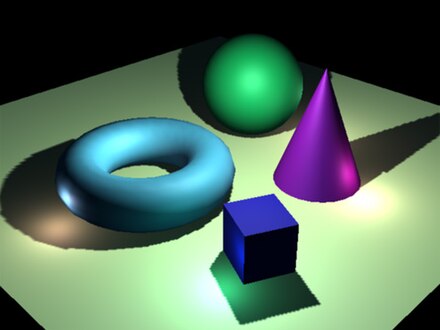

The G-buffer is the collective term for all the textures used to store lighting-relevant data for the final lighting pass.

The primary advantage of deferred shading is the decoupling of scene geometry from lighting. Only one geometry pass is required, and each light is only computed for those pixels that it actually affects. This gives the ability to render many lights in a scene without a significant performance hit.

The primary advantage of deferred rendering is that lighting is only computed for fragments that are visible. Consider a scene with many lights: in forward rendering, each fragment requires a loop over all light sources and, for each light, an evaluation of the reflectance model.
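To make the cost argument concrete, here is a toy counting model in TypeScript (chosen because the question asks for JavaScript; it runs as plain JS once the type annotations are removed). It only counts reflectance-model evaluations. `depthComplexity` (average overdraw) and `lightPixelFraction` are hypothetical parameters, and real GPU cost also depends on early-z rejection, G-buffer bandwidth and much more.

```typescript
// Toy cost model: counts reflectance-model evaluations only. It ignores
// early-z rejection, G-buffer bandwidth and everything else a GPU does.
interface SceneStats {
  width: number;           // framebuffer width in pixels
  height: number;          // framebuffer height in pixels
  depthComplexity: number; // hypothetical: average fragments rasterized per pixel
  lightCount: number;
}

// Forward rendering: every rasterized fragment loops over all lights,
// including fragments that are later hidden by closer geometry.
function forwardEvaluations(s: SceneStats): number {
  return s.width * s.height * s.depthComplexity * s.lightCount;
}

// Deferred shading: the geometry pass only writes the G-buffer; lighting runs
// once per visible pixel per light. `lightPixelFraction` models lights that
// only touch part of the screen (1 = every light covers every pixel).
function deferredEvaluations(s: SceneStats, lightPixelFraction = 1): number {
  return s.width * s.height * s.lightCount * lightPixelFraction;
}

const scene: SceneStats = { width: 1280, height: 720, depthComplexity: 3, lightCount: 100 };
console.log(forwardEvaluations(scene));        // 276480000
console.log(deferredEvaluations(scene));       // 92160000
console.log(deferredEvaluations(scene, 0.25)); // 23040000
```

In this model the deferred saving comes from two places: overdraw no longer multiplies the lighting work, and lights that cover only part of the screen are only evaluated where they matter.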

Visibility buffers [Burns2013] are a flavor of deferred shading. In a way, a visibility buffer is the smallest conceivable G-buffer. In my renderer, its size is 32 bits per pixel. It stores nothing but the index of the visible triangle.
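A visibility buffer can be modeled on the CPU as one 32-bit unsigned integer per pixel. This is a minimal sketch that assumes depth testing happens against a separate depth array (on a GPU that would be the depth attachment); the class and method names are hypothetical. A later shading pass would fetch the triangle's vertex attributes using the stored index.

```typescript
// Sentinel meaning "no triangle covers this pixel".
const NO_TRIANGLE = 0xffffffff;

class VisibilityBuffer {
  readonly width: number;
  readonly height: number;
  readonly ids: Uint32Array;             // 32 bits per pixel: visible triangle index
  private readonly depth: Float32Array;  // assumed separate depth for the depth test

  constructor(width: number, height: number) {
    this.width = width;
    this.height = height;
    this.ids = new Uint32Array(width * height).fill(NO_TRIANGLE);
    this.depth = new Float32Array(width * height).fill(Infinity);
  }

  // Called for every rasterized fragment; keeps only the nearest triangle.
  writeFragment(x: number, y: number, z: number, triangleIndex: number): void {
    const i = y * this.width + x;
    if (z < this.depth[i]) {
      this.depth[i] = z;
      this.ids[i] = triangleIndex >>> 0;
    }
  }

  // Returns the visible triangle index, or null for background pixels.
  triangleAt(x: number, y: number): number | null {
    const id = this.ids[y * this.width + x];
    return id === NO_TRIANGLE ? null : id;
  }
}
```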

G-Buffers are just a set of buffers generally used in deferred rendering.

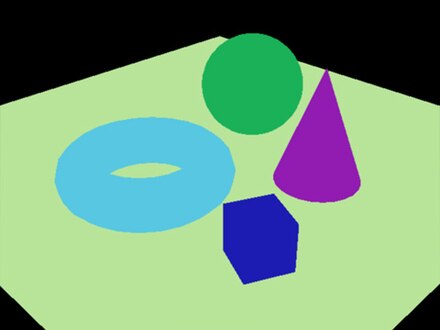

Wikipedia gives a good example of the kind of data often found in a g-buffer:

Diffuse color info

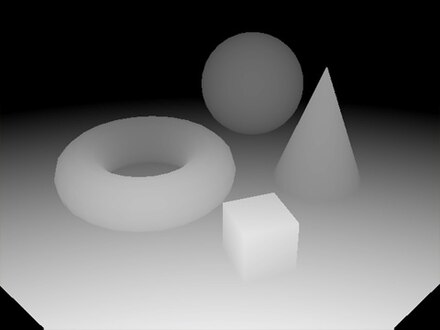

World space or screen space normals

Depth buffer / Z-Buffer

The combination of those 3 buffers is referred to as a "g-buffer".

After generating those 3 buffers from geometry and material data, you can run a shader that combines them to produce the final image.
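The two steps (filling the buffers, then combining them) can be sketched on the CPU. This is a toy model, not WebGL code: in WebGL the geometry pass would render into textures attached to a framebuffer, and the lighting pass would be a full-screen shader sampling those textures. The buffer layout, the Lambert-only lighting and all names here are assumptions for illustration.

```typescript
type Vec3 = [number, number, number];

// Toy G-buffer holding the three buffers listed above (layout is an assumption).
interface GBuffer {
  width: number;
  height: number;
  diffuse: Float32Array; // RGB per pixel
  normal: Float32Array;  // unit normal (x, y, z) per pixel
  depth: Float32Array;   // one value per pixel; Infinity means "nothing drawn"
}

interface DirectionalLight {
  toLight: Vec3; // unit vector pointing from the surface toward the light
  color: Vec3;
}

function createGBuffer(width: number, height: number): GBuffer {
  const n = width * height;
  return {
    width,
    height,
    diffuse: new Float32Array(n * 3),
    normal: new Float32Array(n * 3),
    depth: new Float32Array(n).fill(Infinity),
  };
}

// Geometry pass: keep material and normal data only for the nearest fragment.
function writeFragment(g: GBuffer, x: number, y: number, z: number, color: Vec3, normal: Vec3): void {
  const i = y * g.width + x;
  if (z >= g.depth[i]) return; // depth test failed: fragment is hidden
  g.depth[i] = z;
  g.diffuse.set(color, i * 3);
  g.normal.set(normal, i * 3);
}

// Lighting pass: combine the buffers into the final image (Lambert diffuse only).
function lightingPass(g: GBuffer, lights: DirectionalLight[]): Float32Array {
  const out = new Float32Array(g.width * g.height * 3);
  for (let i = 0; i < g.width * g.height; i++) {
    if (g.depth[i] === Infinity) continue; // background pixel stays black
    const nx = g.normal[i * 3], ny = g.normal[i * 3 + 1], nz = g.normal[i * 3 + 2];
    for (const light of lights) {
      const nDotL = Math.max(0, nx * light.toLight[0] + ny * light.toLight[1] + nz * light.toLight[2]);
      for (let c = 0; c < 3; c++) {
        out[i * 3 + c] += g.diffuse[i * 3 + c] * light.color[c] * nDotL;
      }
    }
  }
  return out;
}
```

Each light is evaluated once per covered pixel, no matter how many triangles were drawn into that pixel during the geometry pass.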

What actually goes into a g-buffer is up to the particular engine/renderer. For example, one of Unity3D's deferred renderers stores diffuse color, occlusion, specular color, roughness, normal, depth, stencil, emission, lighting, lightmap and reflection probes.
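Because every attachment costs memory and bandwidth at full resolution, it helps to add up the bytes per pixel. The layout below is hypothetical, loosely modeled on the kind of list above; the formats in the comments are assumptions, not Unity's documented layout.

```typescript
// One render target (or the depth/stencil attachment) and its cost per pixel.
interface Attachment {
  contents: string;
  bytesPerPixel: number;
}

// Hypothetical layout; the formats in the comments are assumptions.
const layout: Attachment[] = [
  { contents: "diffuse color + occlusion", bytesPerPixel: 4 },  // RGBA8
  { contents: "specular color + roughness", bytesPerPixel: 4 }, // RGBA8
  { contents: "normal", bytesPerPixel: 4 },                     // RGB10_A2
  { contents: "emission + lighting", bytesPerPixel: 8 },        // RGBA16F
  { contents: "depth + stencil", bytesPerPixel: 4 },            // D24S8
];

// Total G-buffer memory for one frame at the given resolution.
function gBufferBytes(attachments: Attachment[], width: number, height: number): number {
  const perPixel = attachments.reduce((sum, a) => sum + a.bytesPerPixel, 0);
  return perPixel * width * height;
}

console.log(gBufferBytes(layout, 1920, 1080)); // 49766400 (about 47.5 MiB)
```

For comparison, a 32-bit-per-pixel visibility buffer at the same resolution needs 8294400 bytes (about 7.9 MiB).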

An ND buffer stands for "normal depth buffer", which makes it a subset of what's usually found in a typical g-buffer.
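As an illustration of how small an ND buffer can be, the following sketch packs a normal and a depth value into a single RGBA8 texel, which is one way to get by without float or depth textures. The layout is an assumption, not taken from the thesis: only normal.x and normal.y are stored, and z is rebuilt assuming view-space normals face the camera, which does not always hold under perspective projection. More robust encodings, such as octahedral mapping, exist.

```typescript
type Vec3 = [number, number, number];

// Remaps a value in [-1, 1] to a byte in [0, 255] and back.
const toByte = (v: number): number =>
  Math.round((Math.min(1, Math.max(-1, v)) * 0.5 + 0.5) * 255);
const fromByte = (b: number): number => (b / 255) * 2 - 1;

// Hypothetical ND-buffer texel (RGBA8): R,G = normal.x/.y, B,A = 16-bit depth.
// normal.z is not stored; it is rebuilt assuming it points toward the camera (z >= 0).
function packND(normal: Vec3, depth01: number): Uint8Array {
  const d = Math.round(Math.min(1, Math.max(0, depth01)) * 65535);
  return new Uint8Array([toByte(normal[0]), toByte(normal[1]), d >> 8, d & 0xff]);
}

function unpackND(texel: Uint8Array): { normal: Vec3; depth01: number } {
  const x = fromByte(texel[0]);
  const y = fromByte(texel[1]);
  const z = Math.sqrt(Math.max(0, 1 - x * x - y * y)); // reconstructed, assumes z >= 0
  const depth01 = (texel[2] * 256 + texel[3]) / 65535;
  return { normal: [x, y, z], depth01 };
}
```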

As for a sample, a complete one is arguably too big for SO, but there's an article about deferred rendering in WebGL on MDN.

Choosing a rendering path is a major architectural decision for a 3D renderer, no matter which API it uses. That choice depends heavily on the set of features the renderer has to support and on its performance requirements.

A substantial set of said features consists of so-called screen-space effects. This means that we render some crucial data about each pixel of the screen to a set of renderbuffers and then use that data (not the geometry) to compute some new data needed for a frame. Ambient Occlusion is a great example of such an effect: based on some spatial values of pixels, we compute a "mask" which we can later use to properly shade each pixel.
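A much simplified, depth-only variant of screen-space ambient occlusion shows the idea of computing a mask purely from per-pixel data. This sketch assumes a linear depth buffer where smaller values are closer to the camera; real SSAO implementations also use normals, sample in 3D and apply a range check, all of which are omitted here. The function name and default parameters are hypothetical.

```typescript
// Crude depth-only occlusion: for each pixel, the fraction of neighbours within
// `radius` pixels that are closer to the camera by more than `bias`.
// Smaller depth = closer (assumption). Returns occlusion in [0, 1] per pixel.
function depthOnlyAO(
  depth: Float32Array,
  width: number,
  height: number,
  radius = 1,
  bias = 0.01,
): Float32Array {
  const ao = new Float32Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const d = depth[y * width + x];
      let occluders = 0;
      let samples = 0;
      for (let dy = -radius; dy <= radius; dy++) {
        for (let dx = -radius; dx <= radius; dx++) {
          if (dx === 0 && dy === 0) continue; // skip the pixel itself
          const sx = x + dx;
          const sy = y + dy;
          if (sx < 0 || sy < 0 || sx >= width || sy >= height) continue; // off-screen
          samples++;
          if (depth[sy * width + sx] < d - bias) occluders++; // neighbour sits in front
        }
      }
      ao[y * width + x] = samples > 0 ? occluders / samples : 0;
    }
  }
  return ao;
}
```

The lighting pass would then scale each pixel's ambient term by `1 - ao[i]`, which is the "mask" described above.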

Moreover, there is a rendering path which relies almost exclusively on screen-space computations: Deferred Shading. That's where the G-buffer comes in. All the data needed to compute the colour of a pixel is rendered to a G-buffer: a set of renderbuffers storing that data. The data itself (and hence the meaning of the G-buffer's renderbuffers) can vary: diffuse component, specular component, shininess, normal, position, depth, etc. As part of rendering a frame, contemporary deferred shading engines also use screen-space ambient occlusion (SSAO), which uses data from several of the G-buffer's renderbuffers (usually position, normal and depth).

About ND-buffers. It seems to me that it's not a widely used term (Google failed to find any relevant info on them besides this question). I believe that ND stands for Normal-Depth. They're just a specific case of a G-buffer for a particular algorithm and effect (in the thesis it's SSAO).

So whether you use G-buffers (and ND-buffers as a subset of G-buffers), and exactly what they contain, depends on the shading algorithms and effects you're implementing. But all screen-space computations will require some form of G-buffer.

P.S. The thesis you linked contains an inaccuracy. The author lists the ability to implement ND-buffers on GLES 2.0 as an advantage of the method. However, that isn't actually possible in core GLES 2.0, since it doesn't have depth textures (they were added by the OES_depth_texture extension).

I would like to add some more information to the previous answers.

> I read in several posts of ND-Buffers and G-Buffers as if it were a strategic choice for WebGL development.

One of the most important aspects of deferred rendering is whether the given platform supports MRT (multiple render targets). If it doesn't, you can't share partial calculations in shaders between the outputs, and you are forced to run the rendering as many times as you have "layers" (in the case of Unity 3D, that might be up to 11 times?). This could slow your program down a lot.
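The pass count follows from simple arithmetic. In this hypothetical helper, `maxDrawBuffers` is how many color targets a single draw call can write: 1 without MRT, or the value queried via `MAX_DRAW_BUFFERS_WEBGL` when the `WEBGL_draw_buffers` extension is available.

```typescript
// Number of times the scene geometry must be drawn to fill `layers` G-buffer
// targets when one draw call can write at most `maxDrawBuffers` color targets.
function geometryPasses(layers: number, maxDrawBuffers: number): number {
  if (layers <= 0) return 0;
  return Math.ceil(layers / Math.max(1, maxDrawBuffers));
}

console.log(geometryPasses(4, 1)); // 4: no MRT, one geometry pass per layer
console.log(geometryPasses(4, 4)); // 1: all four targets written in one pass
```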

Read more in this question: "Is deferred rendering/shading possible with OpenGL ES 2.0?"

WebGL 1 doesn't support MRT out of the box, but there is an extension for it: https://www.khronos.org/registry/webgl/extensions/WEBGL_draw_buffers/

Also there is an extension for depth textures: https://www.khronos.org/registry/webgl/extensions/WEBGL_depth_texture/
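Detecting both extensions at startup is a reasonable first step before committing to a deferred path. In this sketch, `ExtensionProvider` is a hypothetical structural stand-in for the part of `WebGLRenderingContext` that is used, so the function can be tried without a browser; a real WebGL 1 context satisfies it, and `getExtension` returns `null` for unsupported extensions. In a WebGL 2 context, multiple render targets and depth textures are core features, so this check is only needed for WebGL 1.

```typescript
// Hypothetical structural stand-in for the part of WebGLRenderingContext used here.
interface ExtensionProvider {
  getExtension(name: string): unknown;
}

interface DeferredCaps {
  multipleRenderTargets: boolean; // WEBGL_draw_buffers available
  depthTextures: boolean;         // WEBGL_depth_texture available
}

function detectDeferredCaps(gl: ExtensionProvider): DeferredCaps {
  return {
    multipleRenderTargets: gl.getExtension("WEBGL_draw_buffers") !== null,
    depthTextures: gl.getExtension("WEBGL_depth_texture") !== null,
  };
}

// In a browser, pass a real WebGL 1 context, e.g. canvas.getContext("webgl").
```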

So it should be possible to use deferred rendering techniques in WebGL, but how fast they will run is hard to predict.
