Is there a unified theory of caching? That is, is there a collection of theorems and algorithms for constructing caches and/or optimizing for them?

The question is deliberately broad, because the results I am looking for are also broad. Formulas for maximum achievable speedup, metrics for caching algorithms, stuff like that. A university-level textbook would probably be ideal.

L1 cache, or primary cache, is extremely fast but relatively small, and is usually embedded in the processor chip as CPU cache. L2 cache, or secondary cache, is often more capacious than L1.

Caching (pronounced “cashing”) is the process of storing data in a cache. A cache is a temporary storage area. For example, the files you automatically request by looking at a Web page are stored on your hard disk in a cache subdirectory under the directory for your browser.

In computing, a cache is a high-speed data storage layer which stores a subset of data, typically transient in nature, so that future requests for that data are served up faster than is possible by accessing the data's primary storage location.
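As a rough illustration of that definition, here is a minimal read-through cache sketch in Python. The names `ReadThroughCache` and `slow_square` are made up for this example, and the `time.sleep` call only stands in for a slow primary storage location.

```python
import time


class ReadThroughCache:
    """Keeps results of a slow loader in a dict so repeat requests skip the loader."""

    def __init__(self, load):
        self._load = load    # function that reads from the slow primary location
        self._store = {}     # the fast layer: holds a subset of the data
        self.misses = 0      # how many times we had to go to the slow location

    def get(self, key):
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._load(key)
        return self._store[key]


def slow_square(n):
    """Hypothetical stand-in for an expensive lookup."""
    time.sleep(0.01)
    return n * n


cache = ReadThroughCache(slow_square)
results = [cache.get(k) for k in (3, 3, 4, 3)]
print(results, "misses:", cache.misses)
```

Only the first request for each key pays the slow path. This sketch never evicts anything, so it assumes the whole working set fits in memory.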

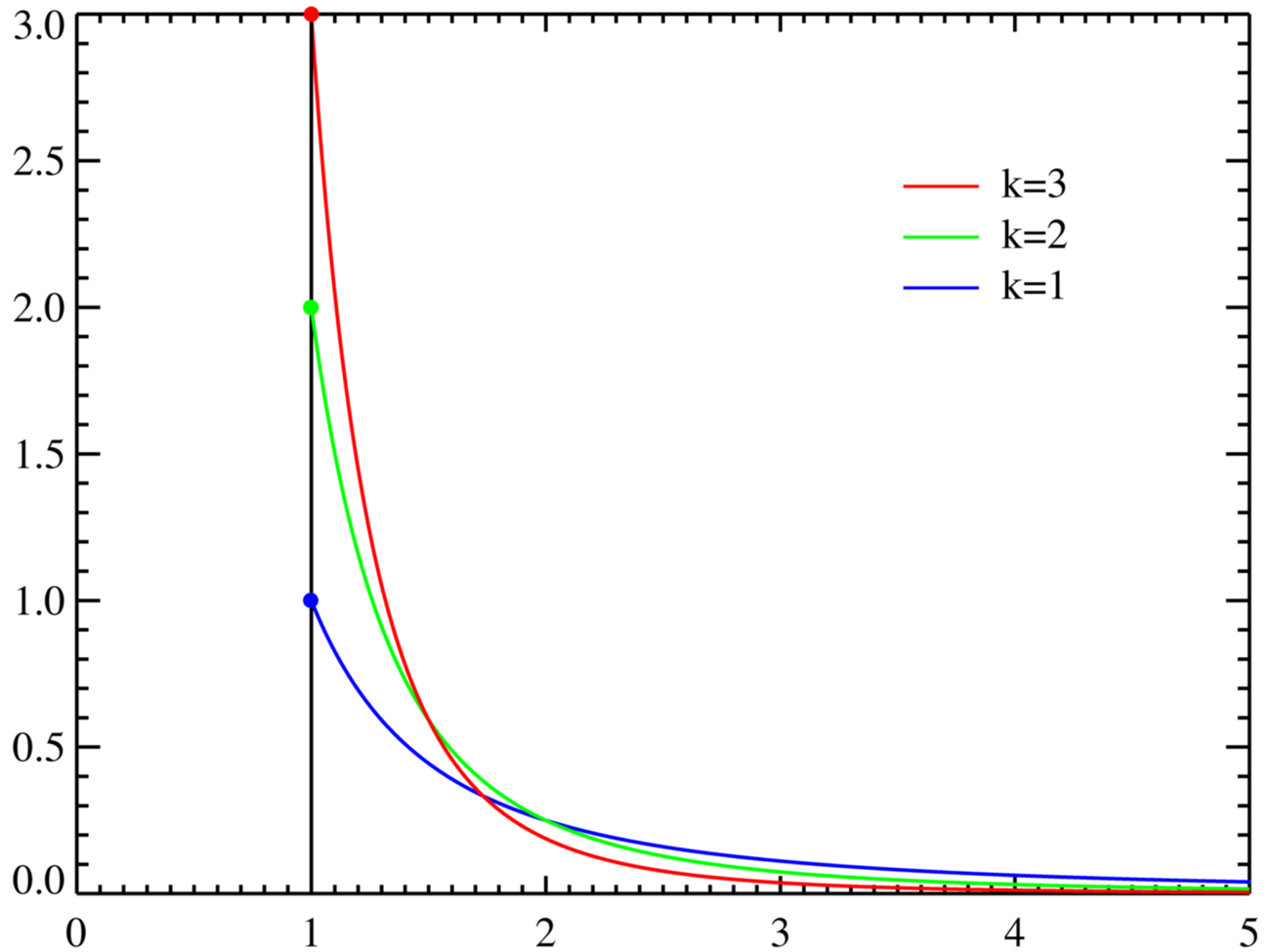

The vast majority of real-world caching involves exploiting the "80-20 rule", or Pareto distribution: a small fraction of the items accounts for most of the accesses. The chart above shows what it looks like.

This manifests itself in applications as:

Therefore, I would say the "theory of caching" is to use up just a few extra resources which are generally "rare" but "fast" to compensate for the most active repeated things you're going to do.
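One standard way to put a number on this is the average access time. The symbols here are my own notation, and I am assuming a miss simply costs the slow access time (ignoring any lookup overhead): $h$ is the hit ratio, $T_{\text{fast}}$ the cache access time, and $T_{\text{slow}}$ the time to reach the primary location.

$$
T_{\text{avg}} = h\,T_{\text{fast}} + (1 - h)\,T_{\text{slow}},
\qquad
S = \frac{T_{\text{slow}}}{T_{\text{avg}}} \le \frac{T_{\text{slow}}}{T_{\text{fast}}}
$$

For example, with $h = 0.9$, $T_{\text{fast}} = 1$ and $T_{\text{slow}} = 100$ (arbitrary units), $T_{\text{avg}} = 0.9 + 10 = 10.9$, so the speedup is $S \approx 9.2$. The upper bound $T_{\text{slow}} / T_{\text{fast}}$ is only approached as $h \to 1$.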

The reason you do this is to try to "level out" the number of times you do the "slow" operation based on the highly skewed chart above.
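To see the effect of the skew, here is a small simulation sketch. It is only an illustration under my own assumptions: the skewed workload uses weights proportional to 1/rank (a Zipf-like stand-in for the 80-20 shape), the cache uses LRU eviction, and the sizes (1000 keys, a cache of 100 entries) are arbitrary.

```python
import random
from collections import OrderedDict


def lru_hit_ratio(requests, capacity):
    """Fraction of requests served from an LRU cache of the given capacity."""
    cache = OrderedDict()
    hits = 0
    for key in requests:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)   # evict the least recently used key
            cache[key] = True
    return hits / len(requests)


def make_requests(n_keys, n_requests, skewed, seed=0):
    """Random request stream: Zipf-like weights if skewed, uniform otherwise."""
    rng = random.Random(seed)
    keys = list(range(n_keys))
    weights = [1.0 / (rank + 1) for rank in keys] if skewed else None
    return rng.choices(keys, weights=weights, k=n_requests)


skewed_ratio = lru_hit_ratio(make_requests(1000, 50_000, skewed=True), 100)
uniform_ratio = lru_hit_ratio(make_requests(1000, 50_000, skewed=False), 100)
print(f"skewed workload:  {skewed_ratio:.2f}")
print(f"uniform workload: {uniform_ratio:.2f}")
```

With a cache holding 10% of the keys, the uniform workload should hit only about 10% of the time, while the skewed workload should hit far more often. That gap is the "levelling out" described above.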

I talked to one of the professors at my school, who pointed me towards online algorithms, which seems to be the topic I am looking for.

There is a great deal of overlap between caching algorithms and page replacement algorithms. I will probably edit the Wikipedia pages for these topics to clarify the connection, once I have learned more about the subject.
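To make the connection concrete, here is a sketch that counts misses (page faults) on the same reference string for two policies: LRU, which is an online algorithm, and Belady's optimal policy, which evicts the entry whose next use is farthest in the future and so needs to know the whole request sequence in advance. The function names and the reference string are just choices for this example.

```python
from collections import OrderedDict


def lru_faults(refs, capacity):
    """Misses under LRU: evict the entry that was used least recently."""
    cache = OrderedDict()
    faults = 0
    for key in refs:
        if key in cache:
            cache.move_to_end(key)
        else:
            faults += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)
            cache[key] = True
    return faults


def opt_faults(refs, capacity):
    """Misses under Belady's offline policy: evict the entry used farthest ahead."""
    cache = set()
    faults = 0
    for i, key in enumerate(refs):
        if key in cache:
            continue
        faults += 1
        if len(cache) >= capacity:
            future = refs[i + 1:]

            def next_use(k):
                # Position of the next request for k, or infinity if never used again.
                return future.index(k) if k in future else float("inf")

            cache.remove(max(cache, key=next_use))
        cache.add(key)
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print("LRU:", lru_faults(refs, 3))   # prints "LRU: 12"
print("OPT:", opt_faults(refs, 3))   # prints "OPT: 9"
```

Comparing an online policy against the offline optimum on the same input is how online algorithms are usually evaluated, which is why the two topics overlap so much.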
