I work for a medical lab company. They need to be able to search through all their client data. So far they have a few years' worth in storage, about 4 million pieces of paper, and they are adding 10,000 pages per day. Data that is 6 months old gets accessed about 10-20 times per day. They are deciding whether to spend 80k on a scanning system and have the secretaries scan everything in house, or to hire a company like Iron Mountain to do it. Iron Mountain will charge around 8 cents per page, which adds up to around $300k for the amount of paper we have, plus roughly $800 more every day for the 10,000 new sheets.

I am thinking that perhaps I can build a database and do all the scanning in house.

FYI none of the answers below answer my questions well enough

Having worked at a medical office doing data entry, OCR will almost certainly not work. Our forms had special text boxes, with a separate box for each letter, and even for that the software was correct only about 75% of the time. There were some forms which allowed freeform writing, but the result was universally gibberish.

I would recommend going the meta-data route; scan everything, but instead of trying to OCR each form, just store it as an image and add meta-data tags.

My thinking is this: the goal of OCR in this case is to enable all forms to be read from the computer, thus making data retrieval simpler. However, you don't really need OCR to do that here, all you need to do is find some way which would allow someone to find a form really fast, and get the right info off the form. As such, even if you store each form as an image, adding the right meta-data tags would allow you to retrieve whatever you need whenever you need it, and the person running the search could either read it right off the stored form, or print it and read it that way.

EDIT: One fairly simple way of executing this plan could be to use a simple database schema, where each image is stored as a single field. Each row would then hold the image along with whatever fields you need to search on, depending on your needs.

Basically, think of all the ways you'd want to search for a given file, and make sure each one is included as a field. Do you look up patients by Patient ID? Include that. Date of visit? Same. If you aren't familiar with designing a database around search requirements, I suggest hiring a developer with database design skills; you can end up with a fast schema that includes everything you want and is powerful enough for your indexing needs. (Bear in mind that much of this will be highly specific to your application. You'll want to optimize it for your situation, and make sure you set it up as well as you can at the outset.)
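As a rough illustration of the "image plus searchable fields" idea, here is a minimal sketch using Python's built-in `sqlite3`. The table name `scanned_form` and the columns `patient_id`, `visit_date` and `form_type` are my own assumptions for the example; the real fields should come from how your staff actually look things up.

```python
import sqlite3


def create_schema(conn):
    """Create a hypothetical table: one row per scanned page, image stored as a BLOB."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS scanned_form (
            id         INTEGER PRIMARY KEY,
            patient_id TEXT NOT NULL,   -- assumed search field
            visit_date TEXT NOT NULL,   -- assumed search field, ISO date string
            form_type  TEXT,            -- assumed search field
            image      BLOB NOT NULL    -- the scanned page itself
        )
        """
    )
    # Index every field people are expected to search by.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_form_patient ON scanned_form (patient_id)")
    conn.execute("CREATE INDEX IF NOT EXISTS idx_form_visit ON scanned_form (visit_date)")


def add_form(conn, patient_id, visit_date, form_type, image_bytes):
    """Store one scanned page together with its meta-data tags; returns the new row id."""
    cur = conn.execute(
        "INSERT INTO scanned_form (patient_id, visit_date, form_type, image) VALUES (?, ?, ?, ?)",
        (patient_id, visit_date, form_type, image_bytes),
    )
    return cur.lastrowid


def find_forms(conn, patient_id=None, visit_date=None):
    """Look up pages by any combination of the tagged fields (image not returned)."""
    clauses, params = [], []
    if patient_id is not None:
        clauses.append("patient_id = ?")
        params.append(patient_id)
    if visit_date is not None:
        clauses.append("visit_date = ?")
        params.append(visit_date)
    where = (" WHERE " + " AND ".join(clauses)) if clauses else ""
    sql = "SELECT id, patient_id, visit_date, form_type FROM scanned_form" + where + " ORDER BY id"
    return conn.execute(sql, params).fetchall()
```

At the volumes in the question you would probably keep the image files on disk and store only a path in the row, but the lookup side would look much the same.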

If you do decide to go down the route of doing this 'in-house', your design needs to have scalability built in from day 1.

This is one rare case in which the task can be broken down and done in parallel.

If you have 10K documents a day and you built and deployed 10 copies of the same setup (scanner + server + custom app), each system would only need to handle around 1K documents.
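To make the split concrete, here is a small sketch of dealing a day's documents out to identical stations in round-robin fashion. The function name `split_across_stations` is hypothetical; any scheme that gives each station an even share would do.

```python
def split_across_stations(document_ids, station_count):
    """Deal documents out to stations round-robin so each gets an even share."""
    if station_count < 1:
        raise ValueError("station_count must be at least 1")
    batches = [[] for _ in range(station_count)]
    for index, doc_id in enumerate(document_ids):
        batches[index % station_count].append(doc_id)
    return batches
```

With 10,000 documents and 10 stations, each batch ends up with 1,000 documents, which is the figure used above.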

The challenge would be to make it a cheap and reliable 'turn key solution'.

The application side is probably the easier element. So long as you have a good automated update system designed in from the start, you can simply add hardware as you expand your 'farm/cluster'.

Keeping your design modular (i.e. using cheap commodity hardware) will allow you to mix and match or replace hardware on demand without affecting daily throughput.

Start with a trial: a turnkey solution that can easily sustain 1,000 documents. Once that works flawlessly, scale it up!

Good luck!

OK, here is a more detailed answer to each of the specific points you have raised:

What are those systems that are used to scan checks and mail and they read really messy hand writing really well?

One such system, used by the mail/post delivery company 'TNT' here in the UK, is provided by a Netherlands-based company, 'Prime Vision', with their HYCR Engine.

I highly suggest you contact them. Handwriting recognition is never going to be very accurate, although OCR on printed characters can sometimes achieve 99% accuracy.

has anyone had experience building a database with a bunch of OCR'd searchable documents? What tools should I use for my problem?

Not specifically OCR'd documents, but for one of our clients I build and maintain a very large and complex EDMS which holds a wide variety of document formats. It is searchable in multiple different ways, with a complex set of data access permissions.

In terms of giving advice, I'd say a few things to bear in mind:

Each approach has its own set of pros and cons. We opted to go the first route. In terms of searchability, once you have the meta data of the actual documents it is just a matter of creating custom search queries. I built a rank-based search; it simply gave a higher ranking to the documents that matched more of the tokens. Of course you could use an off-the-shelf search library such as the Lucene project.
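The rank-based search mentioned above can be sketched roughly like this. It is only a simplified illustration, not the code from our EDMS: the names `tokenize` and `rank_documents` are made up for the example, and the meta data is assumed to be a plain text string per document.

```python
import re


def tokenize(text):
    """Lower-case the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_documents(query, documents):
    """Rank documents by how many distinct query tokens their meta data contains.

    `documents` maps a document id to its meta-data text. Documents that match
    no token are left out; ties are broken by document id.
    """
    query_tokens = tokenize(query)
    scored = []
    for doc_id, metadata_text in documents.items():
        score = len(query_tokens & tokenize(metadata_text))
        if score > 0:
            scored.append((doc_id, score))
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored
```

A library like Lucene adds proper relevance scoring, stemming and an on-disk index on top of this idea, so a hand-rolled ranking like the one above only makes sense while the meta data stays small and simple.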

Can you recommend the best OCR libraries?

yes:

As a programmer, what would you do to solve this problem?

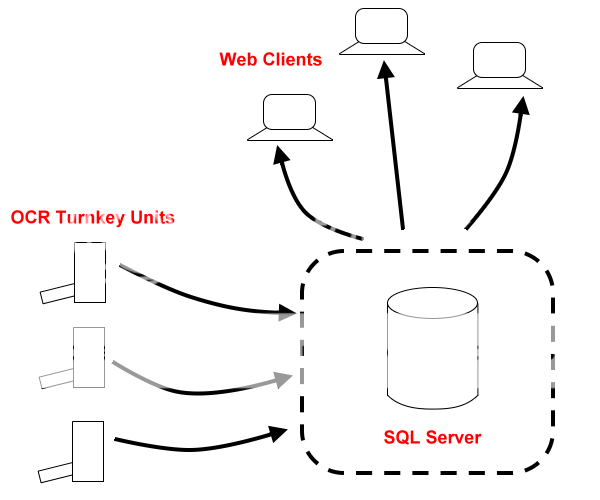

As described above; please see the diagram. The heart of the system will be your database. You will need a front-end presentation layer (this could be a web application) to allow clients to search documents in your database. The second part will be the turnkey OCR 'servers'.

For these 'OCR Servers' I would simply implement a 'drop folder' (which could be an FTP folder). Your custom application could monitor this drop folder (e.g. with the FileSystemWatcher class in .NET). Files could be sent directly to this FTP folder.

Upon receiving a new file, your custom OCR application would scan it, generate the meta data and then move it to a 'Scanned' folder. Files that are duplicates or that failed to scan can be moved to their own 'Failed' folder.

The OCR application would then connect to your main Database and do some Inserts or updates (this moves the META DATA to the main database).
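Here is a rough sketch of one polling pass over such a drop folder, in Python rather than .NET. Everything named here is an assumption for illustration: `process_drop_folder` is a hypothetical function, `extract_metadata` stands in for whatever OCR step you end up using, and duplicates are detected by a SHA-256 hash of the file contents, which is just one possible choice.

```python
import hashlib
import shutil
from pathlib import Path


def process_drop_folder(drop_dir, scanned_dir, failed_dir, extract_metadata, seen_hashes):
    """Run one pass over the drop folder.

    Each file is hashed to spot duplicates, passed to `extract_metadata`
    (a caller-supplied callable, standing in for the OCR step), and then moved
    to the scanned folder on success or the failed folder otherwise.
    Returns a list of (filename, metadata) pairs ready to insert into the database.
    """
    drop_dir, scanned_dir, failed_dir = Path(drop_dir), Path(scanned_dir), Path(failed_dir)
    scanned_dir.mkdir(parents=True, exist_ok=True)
    failed_dir.mkdir(parents=True, exist_ok=True)

    results = []
    for path in sorted(drop_dir.iterdir()):
        if not path.is_file():
            continue
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        try:
            if digest in seen_hashes:
                raise ValueError("duplicate file")
            metadata = extract_metadata(path)
        except Exception:
            # Duplicates and files the OCR step could not handle go to 'Failed'.
            shutil.move(str(path), str(failed_dir / path.name))
            continue
        seen_hashes.add(digest)
        shutil.move(str(path), str(scanned_dir / path.name))
        results.append((path.name, metadata))
    return results
```

In a real deployment you would call this on a timer or from a file-system watcher, and write the returned meta data to the main database in the same step.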

In the background you can have your 'Scanned' folder synchronized with a mirrored folder on your database server (the SQL server shown in the diagram). This physically copies your scanned and OCR'd documents to the main server, where the linked records have already been written.

Anyway, that's how I'd tackle this problem. I've personally implemented one or more of these solutions, so I'm confident this would work and be scalable.

The scalability is key here. For this reason you may want to look at databases other than the traditional ones.

I would recommend that you at least think about a NoSQL-type database for this project:

E.g

Un-ashamed Plug:

Of course for £40,000 I'd build and set up the whole solution for you (including hardware) !

:) I'm kidding SO users!

Note the mention of META DATA; by this I mean the same thing others have alluded to: you should retain the original scan as an image file, along with the OCR'd meta data (so that it allows for text searching).

I thought I'd make this clear, in case it was assumed that it was not part of my solution.

You are currently solving the wrong problem, and 300K is peanuts, as others have already shown. You should focus on eliminating the 10K pages a day you receive now. The other problem just takes a fixed amount of money.

OCR only works reliably for handwriting in very limited domains (recognizing bank numbers, zip codes). The fine results OCR companies advertise are for printed computer documents in standard formats and standard fonts.

The data entry should not be on paper. Period. Focus on making it so. Push the problem further upfront.

And yes, this is not a programmer problem. It is a management problem.
