I'm doing video captioning on the MSR-VTT dataset.

This dataset contains 10,000 videos, and each video has 20 different captions.

My model is a seq2seq RNN. The encoder's inputs are the video features, the decoder's inputs are the embedded target captions, and the decoder's outputs are the predicted captions.
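To make the decoder side concrete, here is a minimal sketch of how one caption is usually split into a decoder input and a decoder target when the decoder is fed the ground-truth caption (teacher forcing). The `<bos>`/`<eos>` token names and the `make_decoder_pair` helper are assumptions for illustration; the post does not say how the captions are tokenized or padded.

```python
# Hypothetical special tokens; the original post does not name them.
BOS, EOS = "<bos>", "<eos>"


def make_decoder_pair(caption):
    """Split one caption into (decoder_input, decoder_target).

    The decoder input is the caption shifted right by one position, so at
    each step the decoder sees the previous ground-truth word and has to
    predict the next one.
    """
    tokens = caption.lower().split()
    decoder_input = [BOS] + tokens    # what the decoder is fed
    decoder_target = tokens + [EOS]   # what the decoder should predict
    return decoder_input, decoder_target


dec_in, dec_target = make_decoder_pair("A man plays tennis")
print(dec_in)      # ['<bos>', 'a', 'man', 'plays', 'tennis']
print(dec_target)  # ['a', 'man', 'plays', 'tennis', '<eos>']
```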

I'm wondering whether using the same videos several times with different captions is useful or not.

Since I couldn't find explicit information, I tried to benchmark it.

I first trained it (Model 1) on 1108 sports videos, with a batch size of 5, for 60 epochs. This configuration takes about 211 seconds per epoch.

Epoch 1/60 ; Batch loss: 5.185806 ; Batch accuracy: 14.67% ; Test accuracy: 17.64%

Epoch 2/60 ; Batch loss: 4.453338 ; Batch accuracy: 18.51% ; Test accuracy: 20.15%

Epoch 3/60 ; Batch loss: 3.992785 ; Batch accuracy: 21.82% ; Test accuracy: 54.74%

...

Epoch 10/60 ; Batch loss: 2.388662 ; Batch accuracy: 59.83% ; Test accuracy: 58.30%

...

Epoch 20/60 ; Batch loss: 1.228056 ; Batch accuracy: 69.62% ; Test accuracy: 52.13%

...

Epoch 30/60 ; Batch loss: 0.739343 ; Batch accuracy: 84.27% ; Test accuracy: 51.37%

...

Epoch 40/60 ; Batch loss: 0.563297 ; Batch accuracy: 85.16% ; Test accuracy: 48.61%

...

Epoch 50/60 ; Batch loss: 0.452868 ; Batch accuracy: 87.68% ; Test accuracy: 56.11%

...

Epoch 60/60 ; Batch loss: 0.372100 ; Batch accuracy: 91.29% ; Test accuracy: 57.51%

Then I trained on the same 1108 sports videos with a batch size of 64 (Model 2).

This configuration takes about 470 seconds per epoch.

Since I have 12 captions for each video, the total number of samples in my dataset is 1108*12.

That's why I chose this batch size (64 ~= 12*old_batch_size), so that the two models run the optimizer roughly the same number of times.
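The arithmetic behind that batch-size choice can be checked with a short sketch. It assumes the first run used one sample per video (1108 samples) and the second used every (video, caption) pair; the `expand_pairs` and `steps_per_epoch` helpers and the toy video IDs are made up for illustration.

```python
import math

NUM_VIDEOS = 1108         # from the post
CAPTIONS_PER_VIDEO = 12   # from the post


def expand_pairs(captions_by_video):
    """Turn {video_id: [captions]} into a flat list of (video_id, caption) samples."""
    return [(vid, cap) for vid, caps in captions_by_video.items() for cap in caps]


def steps_per_epoch(num_samples, batch_size):
    """Number of optimizer updates in one epoch (last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)


# Toy illustration of the expansion used for the second run.
toy = {"video0": ["a man runs", "a person is running"], "video1": ["a dog jumps"]}
print(len(expand_pairs(toy)))  # 3

# Assumed setup: run 1 has one sample per video, run 2 has one per caption.
run1_steps = steps_per_epoch(NUM_VIDEOS, 5)
run2_steps = steps_per_epoch(NUM_VIDEOS * CAPTIONS_PER_VIDEO, 64)
print(run1_steps, run2_steps)  # 222 208
```

Under these assumptions the two runs perform a similar, but not identical, number of updates per epoch, while the second run sees 12 times as many samples in each epoch.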

Epoch 1/60 ; Batch loss: 5.356736 ; Batch accuracy: 09.00% ; Test accuracy: 20.15%

Epoch 2/60 ; Batch loss: 4.435441 ; Batch accuracy: 14.14% ; Test accuracy: 57.79%

Epoch 3/60 ; Batch loss: 4.070400 ; Batch accuracy: 70.55% ; Test accuracy: 62.52%

...

Epoch 10/60 ; Batch loss: 2.998837 ; Batch accuracy: 74.25% ; Test accuracy: 68.07%

...

Epoch 20/60 ; Batch loss: 2.253024 ; Batch accuracy: 78.94% ; Test accuracy: 65.48%

...

Epoch 30/60 ; Batch loss: 1.805156 ; Batch accuracy: 79.78% ; Test accuracy: 62.09%

...

Epoch 40/60 ; Batch loss: 1.449406 ; Batch accuracy: 82.08% ; Test accuracy: 61.10%

...

Epoch 50/60 ; Batch loss: 1.180308 ; Batch accuracy: 86.08% ; Test accuracy: 65.35%

...

Epoch 60/60 ; Batch loss: 0.989979 ; Batch accuracy: 88.45% ; Test accuracy: 63.45%

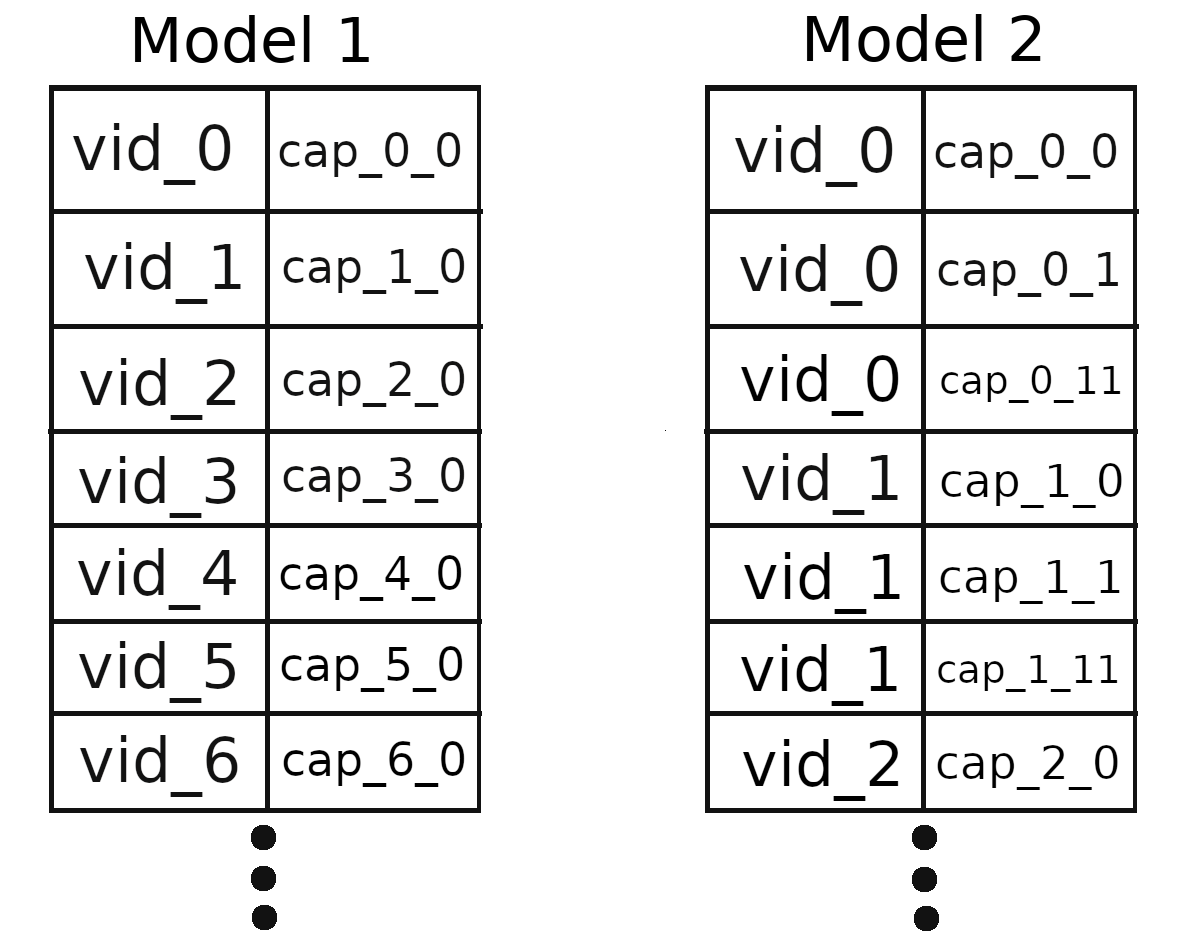

Here is the intuitive representation of my datasets:

When I manually looked at the test predictions, Model 2's predictions looked more accurate than Model 1's.

In addition, I used a batch size of 64 for Model 2, which means I might get even better results by choosing a smaller batch size. It seems I can't improve the training setup for Model 1, since its batch size is already very low.

On the other hand, Model 1 has better loss and training accuracy...

What should I conclude?

Does Model 2 constantly overwrite the previously trained captions with the new ones instead of adding new possible captions?

A Seq2Seq model is a model that takes a sequence of items (words, letters, time series, etc) and outputs another sequence of items. In the case of Neural Machine Translation, the input is a series of words, and the output is the translated series of words.

The most common architecture used to build Seq2Seq models is Encoder-Decoder architecture. As the name implies, there are two components — an encoder and a decoder.

Seq2seq models are also useful in machine translation. In simple terms, an encoder-decoder model reads the input sequence and then predicts the output one word at a time, choosing each next word according to its estimated probability given the input and the words generated so far.

Sequence-to-Sequence (Seq2Seq) modelling is about training models that can convert sequences from one domain into sequences of another domain, for example English to French. This is often done with an LSTM encoder and decoder.

I'm not sure I understand this correctly, since I have only worked with neural networks like YOLO, but here is what I understand: you are training a network to caption videos, and now you want to train on several captions per video, right? I guess the problem is that you are overwriting your previously trained captions with the new ones instead of adding new possible captions.

You need to train on all possible captions from the start; I'm not sure whether your network architecture supports this, though. Getting this to work properly is a bit complex because you would need to compare your output to all possible captions. You probably also need to output the 20 most likely captions instead of just one to get the best possible result. I'm afraid I can't offer more than this thought, because I wasn't able to find a good source.
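As a rough illustration of "comparing your output to all possible captions", the sketch below scores one predicted caption against every reference caption of the same video and keeps the best score. The position-wise `token_accuracy` is only an assumed stand-in, since the question does not say how its accuracy is computed; standard captioning metrics such as BLEU, METEOR, or CIDEr also accept multiple references and are usually a better choice.

```python
def token_accuracy(predicted, reference):
    """Fraction of positions where the two token lists agree.

    Normalized by the longer sequence, so missing or extra words count as errors.
    """
    length = max(len(predicted), len(reference))
    if length == 0:
        return 1.0
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / length


def best_reference_accuracy(predicted, references):
    """Score a prediction against its closest reference caption."""
    return max(token_accuracy(predicted, ref) for ref in references)


# Made-up example captions for a single video.
prediction = "a man is playing tennis".split()
references = [
    "a man plays tennis".split(),
    "a man is playing tennis outside".split(),
    "two people play a game".split(),
]
print(best_reference_accuracy(prediction, references))
```

With this kind of scoring, a prediction is not penalized for matching a valid caption other than the one it happened to be paired with in the test set.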
