I've just finished reading the notes for Stanford's CS231n on CNNs, which link to a live demo; however, I am unsure what "Activations", "Activation Gradients", "Weights" and "Weight Gradients" refer to in the demo. The screenshots below have been copied from the demo.

Confusion point 1

I'm first confused by what "activations" refers to for the input layer. Based on the notes, I thought that the activation layer refers to the RELU layer in a CNN, which essentially tells the CNN which neurons should be lit up (using the RELU function). I'm not sure how that relates to the input layer as shown below. Furthermore, why are there two images displayed? The first image seems to display the image that is provided to the CNN but I'm unable to distinguish what the second image is displaying.

Confusion point 2

I'm unsure what "activations" and "activation gradients" are displaying here, for the same reason as above. I think the "weights" display what the 16 filters in the convolution layer look like, but I'm not sure what "Weight Gradients" is supposed to be showing.

Confusion point 3

I think I understand what "activations" refers to in the RELU layers. It displays the output images of all 16 filters after the RELU function has been applied to every value (pixel) of each output image, which is why each of the 16 images contains pixels that are black (un-activated) or some shade of white (activated). However, I don't understand what "activation gradients" refers to.

Confusion point 4

I also don't understand what "activation gradients" refers to here.

I'm hoping that by understanding this demo, I'll understand CNNs a little more.

This question is similar to this question, but not quite the same. Also, here's a link to the ConvNetJS example code with comments (here's a link to the full documentation). The code itself is shown at the top of the demo page.

An activation function takes some input and produces an output that depends on whether the input reaches some "threshold" (the exact behavior is specific to each activation function). The idea comes from how biological neurons work: they receive some electrical input and only activate once it reaches a threshold.
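As a minimal sketch of the thresholding idea, here is a ReLU applied to a small grid of values. The 3x3 grid and the names `relu`, `pre_activation` and `activations` are made up for illustration; they are not taken from the demo or from ConvNetJS.

```python
def relu(x):
    """ReLU: pass positive values through, clamp everything else to zero."""
    return max(0.0, x)

# Hypothetical 3x3 output of one convolution filter, before the ReLU
pre_activation = [
    [-1.5, 0.2, 3.0],
    [0.0, -0.7, 1.1],
    [2.4, -0.1, -2.0],
]

# Apply the ReLU to every value ("pixel") of the filter output
activations = [[relu(v) for v in row] for row in pre_activation]

for row in activations:
    print(row)
```

Values at or below zero come out as 0 (drawn as black in a visualization), while positive values pass through unchanged (drawn as some shade of white).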

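Confusion Point 1: In the demo, "activations" means the output values of a layer, not only the output of the ReLU layers, so for the input layer the activations are simply the raw input image (the left, colored image). As far as I can tell, the image on the right is the "activation gradient" of the input layer, i.e. the gradient of the loss with respect to each input pixel. You shouldn't really expect to be able to interpret it as a picture, because it is the result of propagating the error back through all of the network's non-linear transformations.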

Confusion Point 2: Similar to the previous point, the "activations" are the values that the layer outputs for the current image, one output image per filter. A gradient here is essentially a slope: the "activation gradients" show how much the loss changes with each output value, and the "weight gradients" show how much it changes with each filter weight. The gradients appear more sparse (i.e., colors show up in only certain places) because they highlight the areas of the image that each node is focusing on. For example, the 6th image on the first row has some color in the bottom left corner; this may indicate that something interesting in this area has a large effect. This article may clear up some confusion on weights and activation functions. And this article has some really great visuals on what each step is doing.

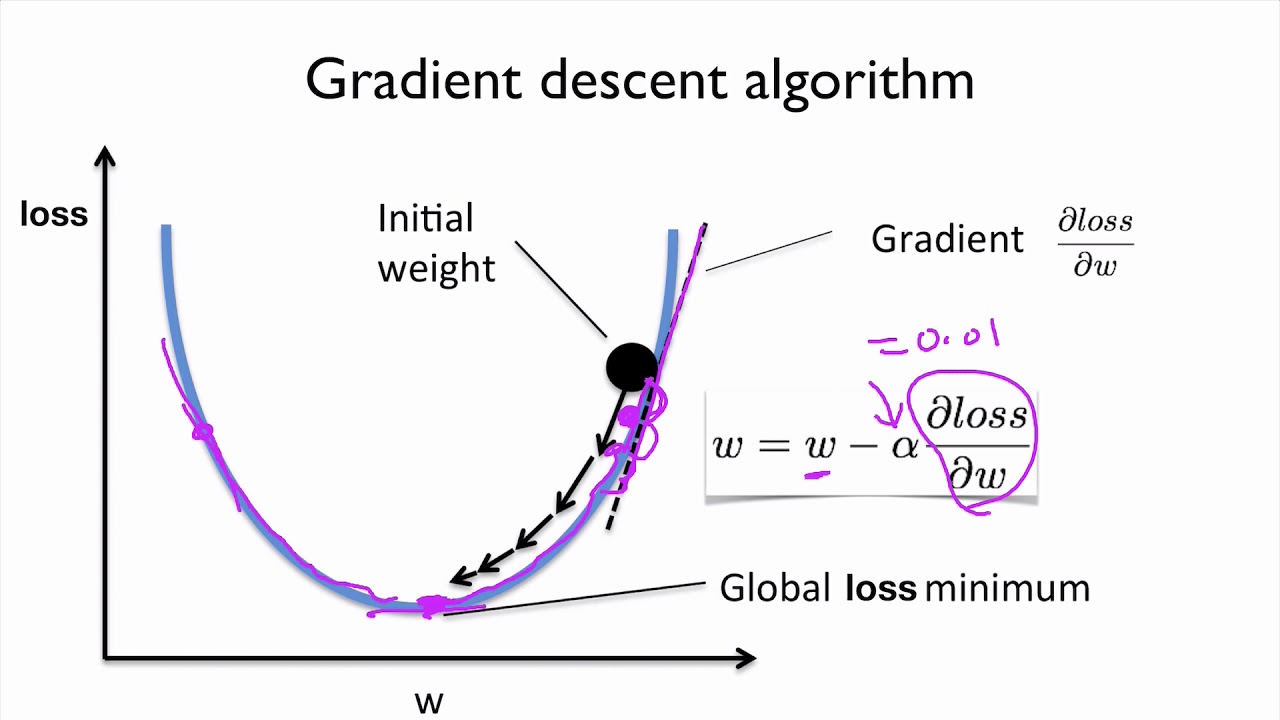
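To make the four labels concrete, here is a rough sketch with a single neuron, a ReLU and a squared-error loss. All numbers and variable names are assumptions chosen for illustration; the demo's network and loss are different, but each quantity plays the same role.

```python
# Hypothetical single neuron: z = w * x + b, a = relu(z), loss = 0.5 * (a - target)^2
x = 2.0        # input value (one "pixel")
w = 0.5        # weight            -> what "Weights" would show
b = 0.1        # bias
target = 3.0   # desired output

# Forward pass
z = w * x + b                 # pre-activation
a = max(0.0, z)               # activation -> what "Activations" would show
loss = 0.5 * (a - target) ** 2

# Backward pass (chain rule)
grad_a = a - target                          # dLoss/da -> an "Activation Gradient"
grad_z = grad_a * (1.0 if z > 0 else 0.0)    # the ReLU passes the gradient only where z > 0
grad_w = grad_z * x                          # dLoss/dw -> a "Weight Gradient"
grad_x = grad_z * w                          # dLoss/dx -> gradient with respect to the input

print("activation:", a)
print("activation gradient:", grad_a)
print("weight gradient:", grad_w)
print("input gradient:", grad_x)
```

The weight gradient tells the optimizer in which direction to nudge `w` to reduce the loss, which is why it has the same shape as the weights themselves.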

Confusion Point 3: This confused me at first, because a ReLU function has a slope of 1 for positive x and 0 everywhere else, so taking the gradient (or slope) of the activation function (ReLU in this case) on its own doesn't explain the values shown. The "max activation" and "min activation" values make sense for a ReLU: the minimum value will be zero and the max is whatever the largest output is. This follows directly from the definition of a ReLU. To explain the gradient values, I initially suspected that some Gaussian noise and a bias term of 0.1 had been added to those values. Edit: the gradient refers to the slope of the cost-weight curve shown below. The y-axis is the loss value (the calculated error) and the x-axis is the weight value w.

Image source https://i.ytimg.com/vi/b4Vyma9wPHo/maxresdefault.jpg
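The following sketch shows why gradients around a ReLU are not just 0s and 1s: the ReLU's own slope only acts as a mask on whatever loss gradient arrives from the later layers. The function name `relu_backward` and the sample values are hypothetical and only meant to illustrate the idea.

```python
def relu_backward(inputs, upstream_grads):
    """Gradient of the loss with respect to the ReLU inputs.

    The ReLU's local slope is 1 where the input is positive and 0 elsewhere,
    so the upstream gradient is passed through or blocked accordingly.
    """
    return [g if x > 0 else 0.0 for x, g in zip(inputs, upstream_grads)]

# Hypothetical values entering the ReLU, and loss gradients arriving from later layers
inputs = [-1.0, 0.5, 2.0, -0.3]
upstream = [0.7, -1.2, 0.05, 2.0]

grads = relu_backward(inputs, upstream)
print(grads)
```

The resulting values can be positive, negative, large or small, because they are slopes of the loss rather than slopes of the ReLU function itself.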

Confusion Point 4: See above.
