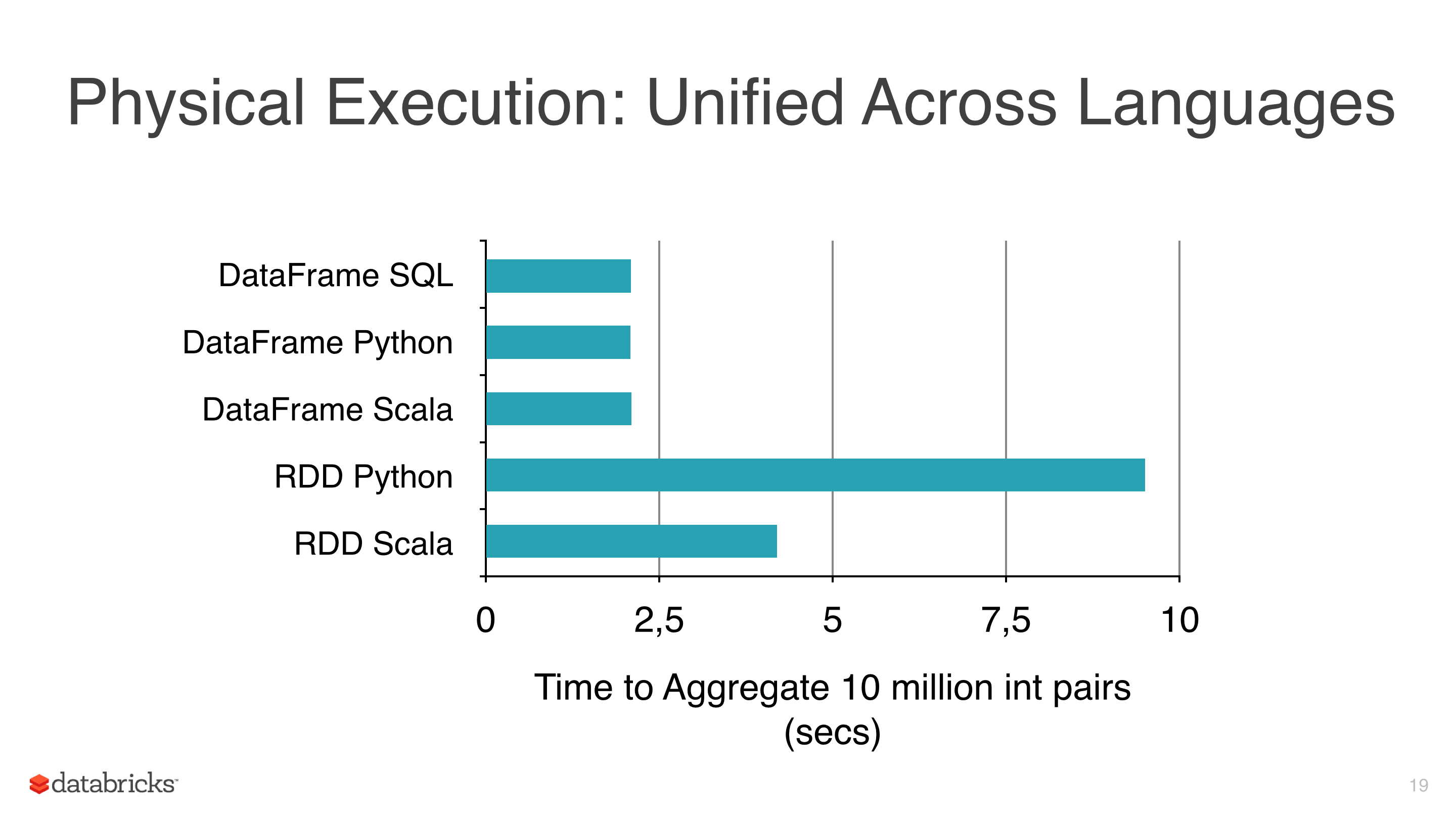

I was applying some machine learning algorithms such as Linear Regression, Logistic Regression, and Naive Bayes to some data, but I wanted to avoid RDDs and start using DataFrames, because RDDs are slower than DataFrames under PySpark (see pic 1).

The other reason I am using DataFrames is that the ml library has a very useful class for tuning models, CrossValidator. Fitting it tests several parameter combinations and returns a fitted model with the best combination of parameters.

The cluster I use isn't very large, the data is pretty big, and some fits take hours, so I want to save these models to reuse them later, but I haven't figured out how. Is there something I am missing?

Notes:

#1 Pickle. Pickle is one of the most popular ways to serialize objects in Python. You can use Pickle to serialize your trained machine learning model and save it to a file. At a later time or in another script, you can deserialize the file to access the trained model and use it to make predictions.

To save the model, all we need to do is pass the model object to Pickle's dump() function. This serializes the object into a "byte stream" that we can save to a file called model.pkl.
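A minimal sketch of the dump/load round trip described above. The dict of weights and the `predict` helper are hypothetical stand-ins for a trained model, not part of any library; only `pickle.dump` and `pickle.load` are the actual calls under discussion.

```python
import os
import pickle
import tempfile

# Hypothetical "trained model": a plain dict of learned parameters.
model = {"weights": [0.5, -0.25], "bias": 0.1}


def predict(params, features):
    """Return 1 if the linear score is non-negative, else 0 (illustrative only)."""
    score = sum(w * x for w, x in zip(params["weights"], features)) + params["bias"]
    return 1 if score >= 0 else 0


# Serialize the model to a file named model.pkl in a temporary directory.
model_path = os.path.join(tempfile.mkdtemp(), "model.pkl")
with open(model_path, "wb") as f:
    pickle.dump(model, f)

# Later, or in another script: deserialize the file and use the model again.
with open(model_path, "rb") as f:
    restored = pickle.load(f)

first = predict(restored, [1.0, 2.0])
second = predict(restored, [0.0, 1.0])
```

The file must be opened in binary mode (`"wb"` / `"rb"`), since pickle writes bytes rather than text.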

At first glance, all Transformers and Estimators implement MLWritable with the following interface:

    def write: MLWriter
    def save(path: String): Unit

and MLReadable with the following interface:

    def read: MLReader[T]
    def load(path: String): T

This means that you can use the save method to write a model to disk, for example:

    import org.apache.spark.ml.PipelineModel

    val model: PipelineModel = ???  // a fitted pipeline
    model.save("/path/to/model")

and read it later:

    val reloadedModel: PipelineModel = PipelineModel.load("/path/to/model")

Equivalent methods are also implemented in PySpark with MLWritable / JavaMLWritable and MLReadable / JavaMLReadable respectively:

    from pyspark.ml import Pipeline, PipelineModel

    model = Pipeline(...).fit(df)
    model.save("/path/to/model")
    reloaded_model = PipelineModel.load("/path/to/model")

SparkR provides write.ml / read.ml functions, but as of today, these are not compatible with other supported languages - SPARK-15572.

Note that the loader class has to match the class of the stored PipelineStage. For example if you saved LogisticRegressionModel you should use LogisticRegressionModel.load not LogisticRegression.load.
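As a rough illustration of the class-matching rule for the CrossValidator case from the question, the sketch below tunes a LogisticRegression, saves the best model, and reloads it with LogisticRegressionModel. The function names, the parameter grid, and the assumption that `train_df` has the default `features` and `label` columns are all hypothetical; the imports are placed inside the functions only so the definitions can be read without a running Spark installation.

```python
def tune_and_save(train_df, path):
    """Fit a CrossValidator and save its best model (hypothetical helper)."""
    from pyspark.ml.classification import LogisticRegression
    from pyspark.ml.evaluation import BinaryClassificationEvaluator
    from pyspark.ml.tuning import CrossValidator, ParamGridBuilder

    lr = LogisticRegression()
    # Illustrative grid; the values are assumptions, not recommendations.
    grid = ParamGridBuilder().addGrid(lr.regParam, [0.01, 0.1]).build()
    cv = CrossValidator(
        estimator=lr,
        estimatorParamMaps=grid,
        evaluator=BinaryClassificationEvaluator(),
        numFolds=3,
    )
    cv_model = cv.fit(train_df)

    # bestModel is a LogisticRegressionModel here, so that is what gets stored.
    best = cv_model.bestModel
    best.write().overwrite().save(path)
    return best


def load_tuned(path):
    """Reload with the class of the stored stage, not the estimator class."""
    from pyspark.ml.classification import LogisticRegressionModel

    return LogisticRegressionModel.load(path)
```

With a SparkSession and a suitable DataFrame, `tune_and_save(train_df, "/path/to/model")` followed by `load_tuned("/path/to/model")` should give back a model that can call `transform` without refitting.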

If you use Spark <= 1.6.0 and experience issues with model saving, I would suggest upgrading to a newer version.

In addition to the Spark-specific methods, there is a growing number of libraries designed to save and load Spark ML models using Spark-independent methods. See for example How to serve a Spark MLlib model?.

Since Spark 1.6 it's possible to save your models using the save method, because almost every model implements the MLWritable interface. For example, LinearRegressionModel has it, so you can use it to save your model to the desired path.

I believe you're making incorrect assumptions here.

Some operations on DataFrames can be optimized, which translates to improved performance compared to plain RDDs. DataFrames provide efficient caching, and the SQL-like API is arguably easier to comprehend than the RDD API.

ML Pipelines are extremely useful, and tools like the cross-validator or the different evaluators are simply must-haves in any machine learning pipeline. Even if none of the above is particularly hard to implement on top of the low-level MLlib API, it is much better to have a ready-to-use, universal, and relatively well-tested solution.

So far so good, but there are a few problems:

- Operations on DataFrames like select or withColumn display similar performance to their RDD equivalents like map.
- Algorithms like ml.classification.NaiveBayes are simply wrappers around their mllib API.

I believe that at the end of the day what you get by using ML over MLLib is a quite elegant, high-level API. One thing you can do is combine both to create a custom multi-step pipeline:

- pass the prepared data to an MLLib algorithm,
- save the MLLib model using a method of your choice (Spark model or PMML).

It is not an optimal solution, but it is the best one I can think of given the current API.
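One possible shape for the MLLib half of such a combined pipeline, as a sketch only. It assumes Spark 2.0 or later (for `Vectors.fromML`), a DataFrame `prepared_df` that already has `label` and `features` columns produced by ML transformers, and uses Naive Bayes purely as an example algorithm; the function names are hypothetical, and the imports sit inside the functions only so the definitions can be read without a running Spark installation.

```python
def train_and_save_mllib_nb(sc, prepared_df, path):
    """Train an MLLib NaiveBayes on ML-prepared data and save it (hypothetical helper)."""
    from pyspark.mllib.classification import NaiveBayes
    from pyspark.mllib.linalg import Vectors
    from pyspark.mllib.regression import LabeledPoint

    # Convert DataFrame rows into the RDD[LabeledPoint] that MLLib expects,
    # turning ML vectors into MLLib vectors along the way.
    labeled = prepared_df.select("label", "features").rdd.map(
        lambda row: LabeledPoint(row["label"], Vectors.fromML(row["features"]))
    )

    model = NaiveBayes.train(labeled)
    # MLLib models take the SparkContext as the first argument to save.
    model.save(sc, path)
    return model


def load_mllib_nb(sc, path):
    """Reload the saved MLLib model in a later session."""
    from pyspark.mllib.classification import NaiveBayesModel

    return NaiveBayesModel.load(sc, path)
```

This covers only the "Spark model" saving option; the PMML route mentioned above is not shown.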

It seems as if the API functionality to save a model is not implemented as of today (see Spark issue tracker SPARK-6725).

An alternative was posted (How to save models from ML Pipeline to S3 or HDFS?) which involves simply serializing the model, but is a Java approach. I expect that in PySpark you could do something similar, i.e. pickle the model to write to disk.
