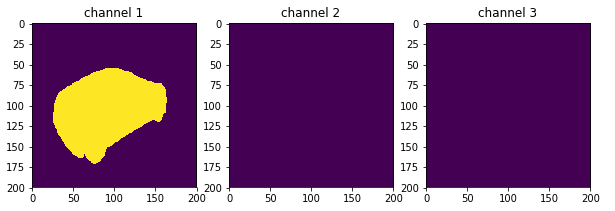

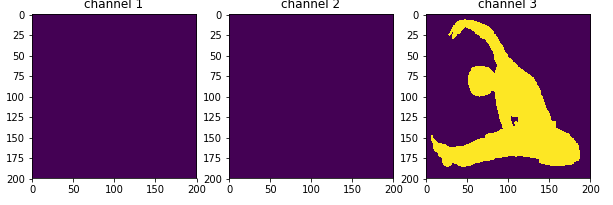

I am trying to implement a multiclass semantic segmentation model with 2 object classes (human, car). Here is my modified implementation of the U-Net architecture. I set the number of output channels to 3 (3 classes: human, car, background). How do I get pixel-wise classification? The two images above are examples from my ground truth masks.

I am using one channel for each object class.

    # Imports assume the tf.keras API; the original post did not show them.
    from tensorflow.keras.layers import (Input, Conv2D, Conv2DTranspose, BatchNormalization,
                                         Activation, MaxPooling2D, Dropout, Reshape, concatenate)
    from tensorflow.keras.models import Model

    def conv_block(tensor, nfilters, size=3, padding='same', initializer="he_normal"):
        # Two (Conv -> BatchNorm -> ReLU) stages with the same number of filters
        x = Conv2D(filters=nfilters, kernel_size=(size, size), padding=padding, kernel_initializer=initializer)(tensor)
        x = BatchNormalization()(x)
        x = Activation("relu")(x)
        x = Conv2D(filters=nfilters, kernel_size=(size, size), padding=padding, kernel_initializer=initializer)(x)
        x = BatchNormalization()(x)
        x = Activation("relu")(x)
        return x

    def deconv_block(tensor, residual, nfilters, size=3, padding='same', strides=(2, 2)):
        # Upsample, concatenate the skip connection on the channel axis, then convolve
        y = Conv2DTranspose(nfilters, kernel_size=(size, size), strides=strides, padding=padding)(tensor)
        y = concatenate([y, residual], axis=3)
        y = conv_block(y, nfilters)
        return y

    def Unet(img_height, img_width, nclasses=3, filters=64):
        # Encoder (contracting path)
        input_layer = Input(shape=(img_height, img_width, 3), name='image_input')
        conv1 = conv_block(input_layer, nfilters=filters)
        conv1_out = MaxPooling2D(pool_size=(2, 2))(conv1)
        conv2 = conv_block(conv1_out, nfilters=filters*2)
        conv2_out = MaxPooling2D(pool_size=(2, 2))(conv2)
        conv3 = conv_block(conv2_out, nfilters=filters*4)
        conv3_out = MaxPooling2D(pool_size=(2, 2))(conv3)
        conv4 = conv_block(conv3_out, nfilters=filters*8)
        conv4_out = MaxPooling2D(pool_size=(2, 2))(conv4)
        conv4_out = Dropout(0.5)(conv4_out)
        conv5 = conv_block(conv4_out, nfilters=filters*16)
        conv5 = Dropout(0.5)(conv5)
        # Decoder (expanding path) with skip connections
        deconv6 = deconv_block(conv5, residual=conv4, nfilters=filters*8)
        deconv6 = Dropout(0.5)(deconv6)
        deconv7 = deconv_block(deconv6, residual=conv3, nfilters=filters*4)
        deconv7 = Dropout(0.5)(deconv7)
        deconv8 = deconv_block(deconv7, residual=conv2, nfilters=filters*2)
        deconv9 = deconv_block(deconv8, residual=conv1, nfilters=filters)
        # Per-pixel class scores: one channel per class
        output_layer = Conv2D(filters=nclasses, kernel_size=(1, 1))(deconv9)
        output_layer = BatchNormalization()(output_layer)
        # Flatten the spatial dimensions so softmax runs over the class axis of each pixel
        output_layer = Reshape((img_height*img_width, nclasses), input_shape=(img_height, img_width, nclasses))(output_layer)
        output_layer = Activation('softmax')(output_layer)
        model = Model(inputs=input_layer, outputs=output_layer, name='Unet')
        return model
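For the pixel-wise classification asked about, a minimal sketch of decoding the network output might look like the following. It assumes the model returns softmax scores of shape `(batch, img_height*img_width, nclasses)`, as the `Reshape` layer above suggests. The helper name `decode_predictions` and the toy `probs` array (standing in for `model.predict(...)`) are hypothetical, as is any particular class ordering.

```python
import numpy as np

def decode_predictions(probs, img_height, img_width):
    """Turn (batch, H*W, nclasses) softmax scores into (batch, H, W) class indices."""
    # argmax over the class axis picks the highest-scoring class for each pixel
    class_ids = np.argmax(probs, axis=-1)
    # undo the flattening done by the Reshape layer so the map lines up with the image
    return class_ids.reshape(-1, img_height, img_width)

# Toy stand-in for model.predict(...): 1 image of 2x2 pixels, 3 classes
probs = np.array([[[0.7, 0.2, 0.1],
                   [0.1, 0.8, 0.1],
                   [0.2, 0.2, 0.6],
                   [0.5, 0.3, 0.2]]])

label_map = decode_predictions(probs, img_height=2, img_width=2)
print(label_map.shape)  # (1, 2, 2)
print(label_map)
```

Each entry of `label_map` is the index of the predicted class for that pixel, which can then be mapped to a color for visualization.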

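You are almost done. Now backpropagate the network error with:

    loss = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=output_layer, labels=labels))
    tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(loss)

You don't have to convert your ground truth into one-hot format; sparse_softmax will do it for you. The labels only need to be integer class indices, one per pixel. Note that this function applies softmax internally and expects unnormalized logits, so feed it the output taken before the final Activation('softmax') layer rather than the softmaxed output.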
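To illustrate why integer labels are enough, here is a small NumPy sketch (not the TensorFlow implementation) that computes the sparse cross-entropy by hand and compares it with the one-hot version. The helpers `masks_to_labels` and `sparse_softmax_cross_entropy` are hypothetical names, and the sketch assumes the ground truth has one binary channel per class, including a background channel, in the order (background, human, car).

```python
import numpy as np

def masks_to_labels(masks):
    """Collapse (H, W, nclasses) per-class binary masks into (H*W,) integer labels."""
    # Assumes exactly one channel is set per pixel (background included)
    return np.argmax(masks, axis=-1).reshape(-1)

def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def sparse_softmax_cross_entropy(logits, labels):
    """Mean cross-entropy from raw logits (N, C) and integer labels (N,)."""
    log_probs = log_softmax(logits)
    # Pick the log-probability of the true class for each pixel
    return -log_probs[np.arange(labels.size), labels].mean()

# Hypothetical 2x2 ground truth; channel order is an assumption
masks = np.zeros((2, 2, 3))
masks[0, 0, 0] = 1  # background
masks[0, 1, 1] = 1  # human
masks[1, 0, 2] = 1  # car
masks[1, 1, 0] = 1  # background
labels = masks_to_labels(masks)

# Random pre-softmax scores standing in for the network output, shape (H*W, nclasses)
rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 3))

sparse_loss = sparse_softmax_cross_entropy(logits, labels)

# Same loss computed the long way, with explicit one-hot targets
one_hot = np.eye(3)[labels]
dense_loss = -(one_hot * log_softmax(logits)).sum(axis=-1).mean()

print(np.isclose(sparse_loss, dense_loss))  # True
```

Indexing the log-probabilities with the integer label selects the same term that the one-hot dot product would, which is why the one-hot conversion can be skipped.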
