I’m a bit confused about how new MapReduce2 applications should be developed to work with YARN and what happen with the old ones.

I currently have MapReduce1 applications which basically consist in:

From one side I see that applications coded in MapReduce1 are compatible in MapReduce2 / YARN, with a few caveats, just recompiling with new CDH5 libraries (I work with Cloudera distribution).

But from other side I see information about writing YARN applications in a different way than MapReduce ones (using YarnClient, ApplicationMaster, etc):

http://hadoop.apache.org/docs/r2.7.0/hadoop-yarn/hadoop-yarn-site/WritingYarnApplications.html

But for me, YARN is just the architecture and how the cluster manage your MR app.

My questions are:

YARN applications including MapReduce applications?YARN application, forgetting drivers

and creating Yarn clients, ApplicationMasters and so on?MapReduce1 (recompiled with MR2 libraries) jobs managed by YARN

in the same way that YARN applications? MapReduce1 applications and YARN applications regarding the way in which YARN will manage them internally?Thanks in advance

HADOOP Version 1

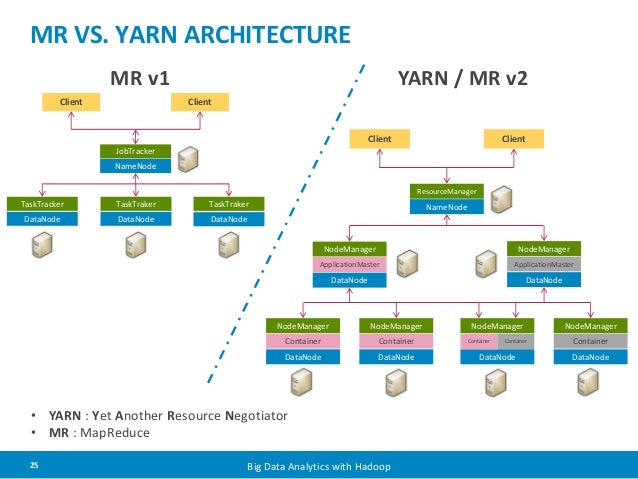

The JobTracker is responsible for resource management---managing the slave nodes--- major functions involve

Issues with Hadoop v1 JobTracker is responsible for all spawned MR applications, it is a single point of failure---If JobTracker goes down, all applications in the cluster are killed. Moreover, if the cluster has a large number of applications, JobTracker becomes the performance bottleneck, to address the issues of scalability and job management Hadoop v2 was released.

Hadoop v2

The fundamental idea of YARN is to split the two major responsibilities of the Job-Tracker—that is, resource management and job scheduling/monitoring—into separate daemons: a global ResourceManager and a per-application ApplicationMaster (AM). The ResourceManager and per-node slave, the NodeManager (NM), form the new, and generic, operating system for managing applications in a distributed manner.

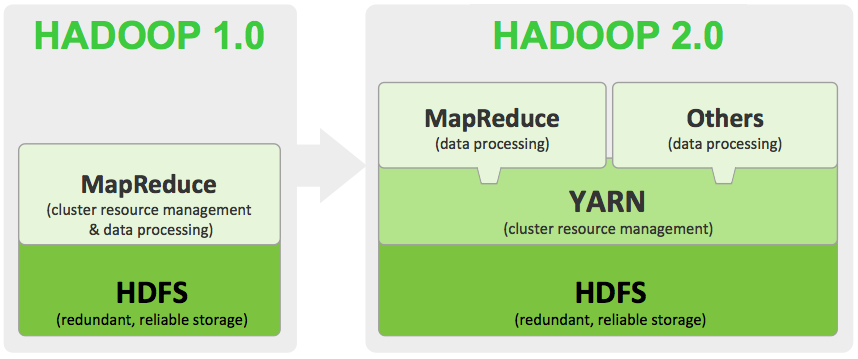

To interact with the new resourceManagement and Scheduling, A Hadoop YARN mapReduce Application is developed---MRv2 has nothing to do with the mapReduce programming API

Application programmers will see no difference between MRv1 and MRv2, MRv2 is fully backward compatible---Yes a MR application(.jar), can be run on both the frameworks without any change in code.

The Hadoop 2.x already contains the code for MR Client and AppMaster, the programmer just needs to focus on their MapReduce Applications.

MapReduce was previously integrated in Hadoop Core---the only API to interact with data in HDFS. Now In Hadoop v2 it runs as a separate Application, Hadoop v2 allows other application programming frameworks---e.g MPI---to process HDFS data.

Refer to Apache documentation page on YARN architecture and related SE posts:

Hadoop gen1 vs Hadoop gen2

Are YARN applications including MapReduce applications?

YARN support Mapreduce applications. It also runs Spark jobs unlike in Hadoop 1.x.

Should I write my code like a YARN application, forgetting drivers and creating Yarn clients, ApplicationMasters and so on?

Yes. You should forget about all these application components and write your application. Have a look at sample code

Can I still develop the client classes with drivers + job settings? ¿Are MapReduce1 (recompiled with MR2 libraries) jobs managed by YARN in the same way that YARN applications?

Yes. You can do. But look at this compatibility article.

What differences are between MapReduce1 applications and YARN applications regarding the way in which YARN will manage them internally?

Refer to this SE post:

What additional benefit does Yarn bring to the existing map reduce?

If you love us? You can donate to us via Paypal or buy me a coffee so we can maintain and grow! Thank you!

Donate Us With