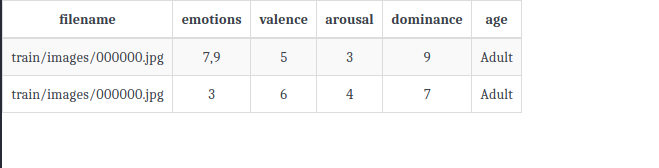

Following issue #10120, I am using the Keras functional API to build a model with multiple (five) outputs and the same input, in order to simultaneously predict different properties of the data (images in my case). All the metadata of the dataset are stored in different CSV files (one for training, one for validation and one for test data).

I have already written code to parse the CSV and save the annotations into separate numpy arrays (x_train.npy, emotions.npy, etc.), which I later load in order to train my CNN.

First, what is the most efficient way to save the parsed annotations in order to load them afterwards?

Is it better to read the annotations on the fly from the CSV file instead of saving them to numpy (or any other format)?

When I load the saved numpy arrays (the following example contains only the images and a single piece of metadata)

(x_train, y_train),(x_val, y_val)

then I do

train_generator = datagen.flow(x_train, y_train, batch_size=32)

and finally,

history = model.fit_generator(train_generator,
                              epochs=nb_of_epochs,
                              steps_per_epoch=steps_per_epoch,
                              validation_data=val_generator,
                              validation_steps=validation_steps,
                              callbacks=callbacks_list)

My program seems to consume up to 20-25GB of RAM for the whole duration of the training process (which is done on GPU). If I add more than one output, my program crashes because of that memory leak (the machine has 32GB of RAM at most).

What would be a correct approach for loading the parsed annotations alongside the raw images?

Let's say the above issue is fixed, what will be a correct approach to make use of ImageDataGenerator for multiple outputs like the following (discussed here as well)

Keras: How to use fit_generator with multiple outputs of different type

Xi[0], [Yi1[1], Yi2[1],Yi3[1], Yi4[1],Yi5[1]]

from math import ceil

def multi_output_generator(hdf5_file, nb_data, batch_size):
    """Generates batches of tensor image data in the form x, [y1, y2, y3, y4, y5]
    for use in a multi-output Keras model.

    # Arguments
        hdf5_file: the hdf5 file which contains the images and the annotations.
        nb_data: total number of samples saved in the array.
        batch_size: size of the batch to generate tensor image data for.

    # Returns
        A five-output generator.
    """
    batches_list = list(range(int(ceil(float(nb_data) / batch_size))))

    while True:
        # loop over batches
        for i in batches_list:
            i_s = i * batch_size  # index of the first image in this batch
            i_e = min((i + 1) * batch_size, nb_data)  # one past the index of the last image in this batch

            x = hdf5_file["x_train"][i_s:i_e, ...]

            # read labels
            y1 = hdf5_file["y1"][i_s:i_e]
            y2 = hdf5_file["y2"][i_s:i_e]
            y3 = hdf5_file["y3"][i_s:i_e]
            y4 = hdf5_file["y4"][i_s:i_e]
            y5 = hdf5_file["y5"][i_s:i_e]

            yield x, [y1, y2, y3, y4, y5]
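As a quick check of the slicing arithmetic used in the generator above, here is a minimal sketch that computes only the start and end indices of each batch. The helper name `batch_bounds` is hypothetical and not part of Keras or h5py. It assumes the end index is exclusive, as in ordinary Python slicing.

```python
from math import ceil

def batch_bounds(nb_data, batch_size):
    """Return (start, end) index pairs for one pass over nb_data samples.

    The end index is exclusive, so the last batch may be shorter than
    batch_size when nb_data is not a multiple of it.
    """
    n_batches = int(ceil(float(nb_data) / batch_size))
    return [(i * batch_size, min((i + 1) * batch_size, nb_data))
            for i in range(n_batches)]

# 10 samples in batches of 4: the last batch holds only 2 samples.
print(batch_bounds(10, 4))  # [(0, 4), (4, 8), (8, 10)]
```

Because the final batch can be shorter, every sample is visited exactly once per pass, provided the number of steps per epoch equals the number of batches.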

As I mentioned in my comment, if the whole training data does not fit in memory, you need to write a custom generator (or use the built-in ImageDataGenerator, but in your particular scenario it is of no use or at least a bit difficult to make it work).

Here is the custom generator I have written (you need to fill in the necessary parts):

import numpy as np
from keras.preprocessing import image

def generator(csv_path, batch_size, img_height, img_width, channels, augment=False):

    ########################################################################
    # The code for parsing the CSV (or loading the data files) should go here.
    # We assume there are two arrays after this:
    #   img_path    --> contains the paths of the images
    #   annotations --> contains the parsed annotations
    ########################################################################

    n_samples = len(img_path)
    # img_to_array returns (height, width, channels), so the batch uses the same order
    batch_img = np.zeros((batch_size, img_height, img_width, channels))
    idx = 0
    while True:
        batch_img_path = img_path[idx:idx+batch_size]
        for i, p in zip(range(batch_size), batch_img_path):
            img = image.load_img(p, target_size=(img_height, img_width))
            img = image.img_to_array(img)
            batch_img[i] = img

        if augment:
            ############################################################
            # Here you can feed the batch_img to an instance of
            # ImageDataGenerator if you would like to augment the images.
            # Note that you need to generate images using that instance as well
            ############################################################
            pass  # placeholder so that the empty branch is valid Python

        # Here we assume that each column in the annotations array
        # corresponds to one of the outputs of our neural net
        # i.e. annotations[:,0] to output1, annotations[:,1] to output2, etc.
        target = annotations[idx:idx+batch_size]
        batch_target = []
        for i in range(annotations.shape[1]):
            batch_target.append(target[:,i])

        idx += batch_size
        if idx > n_samples - batch_size:
            idx = 0

        yield batch_img, batch_target

train_gen = generator(train_csv_path, train_batch_size, 256, 256, 3)
val_gen = generator(val_csv_path, val_batch_size, 256, 256, 3)
test_gen = generator(test_csv_path, test_batch_size, 256, 256, 3)

model.fit_generator(train_gen,
                    steps_per_epoch= ,   # should be set to num_train_samples / train_batch_size
                    epochs= ,            # your desired number of epochs
                    validation_data=val_gen,
                    validation_steps= )  # should be set to num_val_samples / val_batch_size
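The per-output split of the annotations in the generator above can be tried in isolation. The following is a minimal sketch, assuming the annotations are a 2-D numpy array with one row per sample and one column per output. The small integer array is made-up illustration data, not the real annotations.

```python
import numpy as np

# Hypothetical annotations: 4 samples (rows), 3 outputs (columns).
annotations = np.arange(12).reshape(4, 3)

idx, batch_size = 0, 2
target = annotations[idx:idx + batch_size]  # rows belonging to this batch

# One 1-D array per model output, in column order.
batch_target = [target[:, i] for i in range(annotations.shape[1])]

for i, y in enumerate(batch_target):
    print("output", i + 1, y)
```

Each element of `batch_target` has length `batch_size`, which matches the list-of-arrays form that a multi-output model expects for its targets.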

Since I don't know the exact format and types of the annotations, you may need to change this code to make it work for you. Additionally, given the way idx is currently updated, if n_samples is not divisible by batch_size, some of the samples at the end may never be used, so the update can be improved. One quick fix would be:

idx += batch_size
if idx == n_samples:
    idx = 0
elif idx > n_samples - batch_size:
    idx = n_samples - batch_size
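To see what this update does, here is a minimal sketch that records only the start index of each batch. The helper name `batch_starts` is hypothetical, and the sketch assumes n_samples is at least batch_size.

```python
def batch_starts(n_samples, batch_size, n_batches):
    """Return the start index of each of the first n_batches batches,
    using the index update from the quick fix."""
    starts = []
    idx = 0
    for _ in range(n_batches):
        starts.append(idx)
        idx += batch_size
        if idx == n_samples:
            idx = 0  # a full pass is finished, start over
        elif idx > n_samples - batch_size:
            idx = n_samples - batch_size  # last batch overlaps the previous one
    return starts

# 10 samples, batches of 4: the third batch starts at 6 so it still has 4 samples.
print(batch_starts(10, 4, 4))  # [0, 4, 6, 0]
```

With this update every batch is full-sized, at the cost of the last batch in a pass repeating a few samples from the previous one.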

However, no matter how you update idx, if you use fit_generator and n_samples is not divisible by batch_size, then in each epoch either some of the samples may not be generated or some of them may be generated more than once, depending on the value of the steps_per_epoch argument (which I think may not be a significant issue).
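The effect of steps_per_epoch can be illustrated with a small simulation. This is a minimal sketch with made-up numbers (10 samples, batches of 4), using the quick-fix index update. The function name `samples_seen` is hypothetical.

```python
import math

def samples_seen(n_samples, batch_size, steps_per_epoch):
    """Count how many times each sample index is generated in one epoch."""
    counts = [0] * n_samples
    idx = 0
    for _ in range(steps_per_epoch):
        for j in range(idx, idx + batch_size):
            counts[j] += 1
        idx += batch_size
        if idx == n_samples:
            idx = 0
        elif idx > n_samples - batch_size:
            idx = n_samples - batch_size
    return counts

n_samples, batch_size = 10, 4
floor_steps = n_samples // batch_size           # rounds down
ceil_steps = math.ceil(n_samples / batch_size)  # rounds up

# Rounding down: the last samples are never generated in the epoch.
missed = samples_seen(n_samples, batch_size, floor_steps).count(0)
# Rounding up: some samples are generated twice in the epoch.
repeated = sum(c > 1 for c in samples_seen(n_samples, batch_size, ceil_steps))
print(floor_steps, missed, ceil_steps, repeated)
```

Rounding steps_per_epoch down skips the tail of the data in that epoch, while rounding up repeats a few samples. Which one is preferable depends on the dataset.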
