I have an HDF5 file that contains a 2D table with column names. It shows up as such in HDFView when I look at this object, called results.

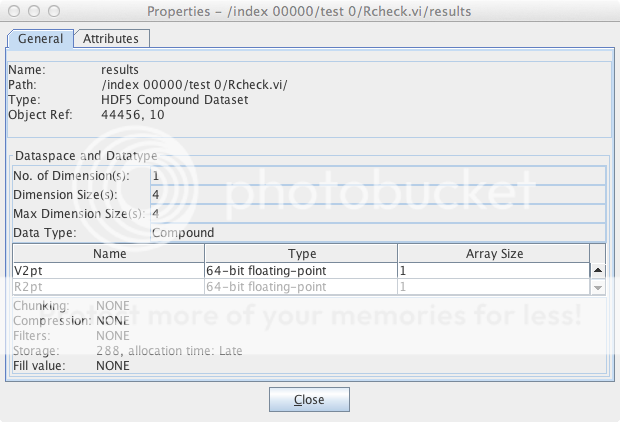

It turns out that results is a "compound Dataset", a one-dimensional array where each element is a row. Here are its properties as displayed by HDFView:

I can get a handle of this object, let's call it res.

The column names are V2pt, R2pt, etc.

I can read the entire array as data, and I can read one element with

res[0,...,"V2pt"].

This will return the number in the first row of column V2pt. Replacing 0 with 1 will return the second row value, etc.

That works if I know the column name a priori. But I don't.

I simply want to get the whole Dataset and its column names. How can I do that?

I see that there is a get_field_info function in the HDF5 documentation, but I find no such function in h5py.

Am I screwed?

Even better would be a solution to read this table as a pandas DataFrame...

This is pretty easy to do in h5py and works just like compound types in Numpy.

If res is a handle to your dataset, res.dtype.fields.keys() will return all the field names; res.dtype.names gives the same names as an ordered tuple.
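To make this concrete, here is a minimal sketch. The in-memory file, the name demo.h5, and the sample values are made up for illustration; only the dataset name results and the field names V2pt and R2pt come from the question. With a real file you would open it normally and take res from it.

```python
import h5py
import numpy as np

# Hypothetical stand-in for the real file: an in-memory HDF5 file holding a
# compound dataset named "results" with two of the fields from the question.
row_dtype = np.dtype([("V2pt", "f8"), ("R2pt", "f8")])
rows = np.array([(1.0, 10.0), (2.0, 20.0), (3.0, 30.0)], dtype=row_dtype)

# driver="core" with backing_store=False keeps everything in memory,
# so nothing is written to disk.
with h5py.File("demo.h5", "w", driver="core", backing_store=False) as f:
    res = f.create_dataset("results", data=rows)

    # Field (column) names as a tuple, in the order they were defined.
    names = res.dtype.names
    print(names)

    # Read a whole column by name without knowing the name in advance.
    first_column = res[names[0]]
    print(first_column)
```

Looping over names this way lets you pull every column out of the dataset without hard-coding any of them.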

If you need the dtype of a specific field, res.dtype.fields['V2pt'] returns a (dtype, offset) tuple for it, and res.dtype['V2pt'] returns the dtype alone.
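As for the pandas part of the question, one possible approach is to read the whole dataset into a NumPy structured array and hand that to pandas. This is a sketch under assumptions: the in-memory file and sample values are invented, and it assumes every field is a plain scalar (no nested or array-valued fields).

```python
import h5py
import numpy as np
import pandas as pd

# Hypothetical sample data; the field names are taken from the question.
row_dtype = np.dtype([("V2pt", "f8"), ("R2pt", "i4")])
rows = np.array([(1.5, 10), (2.5, 20)], dtype=row_dtype)

# In-memory file, nothing is written to disk.
with h5py.File("demo.h5", "w", driver="core", backing_store=False) as f:
    res = f.create_dataset("results", data=rows)

    # dtype.fields maps each field name to a (dtype, byte offset) tuple.
    field_dtype, offset = res.dtype.fields["V2pt"]

    # res[()] reads the entire dataset into a NumPy structured array.
    table = res[()]

# pandas turns each field of the structured array into a DataFrame column.
df = pd.DataFrame.from_records(table)
print(df)
```

The read has to happen while the file is still open; once table is in memory, the DataFrame can be built after the file is closed.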
