UPDATE: This question is outdated; please disregard it.

So... my idea is to load a whole manga/comic at once, with a progress bar, and make a sort of stream, like:

XHTML and HTML5 are acceptable.

What is the fastest way to load a series of images for my website?

EDIT: Following @Oded's comment, the real question is: what is the best technique for loading images so that the user doesn't have to wait every time they turn the 'page'? I'm aiming for an experience closer to reading comics in real life.

EDIT2: As some people helped me realize, I'm looking for a preloader on steroids.

EDIT3: CSS-only techniques won't do.

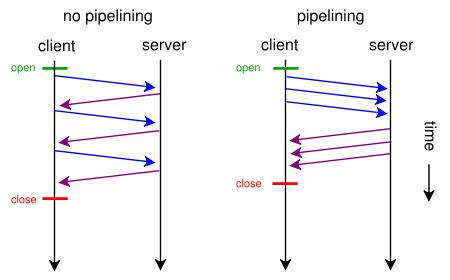

If you split large images into smaller parts, they'll load faster on modern browsers due to pipelining.
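To make the splitting idea concrete, here is a minimal sketch that only computes where to cut, assuming each page is cut into horizontal strips of a fixed height (the helper name `stripRects` and the 1000 px strip size are illustrative choices, not part of the answer). Each strip would then be saved as its own file, cropped ahead of time with whatever image tool you use, and displayed as a separate `<img>` stacked vertically, so the browser can request several strips at once.

```javascript
// Hypothetical helper: compute the crop rectangles for cutting one tall page
// into horizontal strips of at most `stripHeight` pixels. The actual cropping
// would be done ahead of time with an image tool; that step is not shown.
function stripRects(width, height, stripHeight) {
  if (stripHeight <= 0) throw new RangeError("stripHeight must be positive");
  const rects = [];
  for (let y = 0; y < height; y += stripHeight) {
    // The last strip may be shorter than stripHeight.
    rects.push({ x: 0, y, width, height: Math.min(stripHeight, height - y) });
  }
  return rects;
}

// Example: a 1000x2600 page cut into 1000 px strips gives three rectangles,
// the last one 600 px tall.
console.log(stripRects(1000, 2600, 1000));
```

How much this actually helps depends on how many parallel connections the browser opens to your server, so treat it as something to measure rather than a guaranteed win.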

ALTERNATIVE: Is there a way to load a compressed file with all the images and decompress it in the browser?

Image formats are already compressed. You would gain nothing by stitching and trying to further compress them.
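A quick way to see this is a Node.js experiment with the built-in `zlib` module. It assumes random bytes are a fair stand-in for JPEG/PNG data: real image files aren't literally random, but they are already entropy-coded, so a general-purpose compressor finds little redundancy left to remove.

```javascript
// Node.js sketch: random bytes stand in for already-compressed image data,
// compared against highly repetitive data of the same size.
const zlib = require("zlib");
const crypto = require("crypto");

const alreadyCompressed = crypto.randomBytes(64 * 1024); // stand-in for image bytes
const repetitive = Buffer.alloc(64 * 1024, "a");          // very redundant data

const gzippedImage = zlib.gzipSync(alreadyCompressed);
const gzippedText = zlib.gzipSync(repetitive);

console.log("random:", alreadyCompressed.length, "->", gzippedImage.length);
console.log("repetitive:", repetitive.length, "->", gzippedText.length);
```

Expect the random input to come out about the same size or slightly larger, while the repetitive buffer shrinks to a tiny fraction. Zipping a folder of JPEGs behaves much like the first case.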

You can just stick the images together and use background-position to display different parts of them: this is called ‘spriting’. But spriting's mostly useful for smaller images, to cut down the number of HTTP requests to the server and somewhat reduce latency; for larger images like manga pages the benefit is not so large, possibly outweighed by the need to fetch one giant image all at once even if the user is only going to read the first few pages.
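For reference, the positioning arithmetic behind spriting is simple. This sketch assumes all pages have the same height and are stacked vertically in one image; `spritePosition`, the 1200 px page height, and the `page` element id are hypothetical.

```javascript
// Pages are stacked vertically in one sprite image, each `pageHeight` px tall.
// Showing page `index` (0-based) means shifting the background up by
// index * pageHeight pixels.
function spritePosition(index, pageHeight) {
  return `0px ${-index * pageHeight}px`;
}

// In a browser, applied to an element sized to exactly one page:
// document.getElementById("page").style.backgroundPosition = spritePosition(2, 1200);
console.log(spritePosition(2, 1200)); // "0px -2400px"
```

Because the sprite is a single file, the reader downloads every page in it even if they stop after the first few, which is the drawback described above.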

ALTERNATIVE: I was also thinking of saving them as strings and then decoding them.

What would that achieve? Transferring as a string would, in most cases, be considerably slower than raw binary. Then, to get them from JavaScript strings into images, you'd have to use data: URLs, which don't work in IE6-IE7 and are limited in how much data you can put in them. Again, this is meant primarily for small images.
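To put a number on the overhead: the usual way to carry binary data in a string is base64, which encodes every 3 bytes as 4 characters. This Node.js sketch uses a buffer of filler bytes as a stand-in for a 30,000-byte JPEG (the size and MIME type are assumptions) and builds the corresponding `data:` URL.

```javascript
// Node.js sketch: base64 turns every 3 bytes into 4 characters (padded up to
// a multiple of 4), so image data sent as a string grows by about a third.
function base64Length(byteCount) {
  return 4 * Math.ceil(byteCount / 3);
}

const imageBytes = Buffer.alloc(30000, 0xff); // stand-in for a 30,000-byte image
const dataUrl = "data:image/jpeg;base64," + imageBytes.toString("base64");

console.log(imageBytes.length);               // 30000
console.log(base64Length(imageBytes.length)); // 40000
console.log(dataUrl.length);                  // 40023
```

So the string form is about 33% larger before any HTTP compression, and the browser still has to decode it back to binary before it can draw the image.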

I think all you really want is a bog-standard image preloader.

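You could preload the images in JavaScript using:

```javascript
var x = new Image();
x.src = "someurl"; // setting src starts the download; later displays of the same URL can come from cache
```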

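This downloads the image ahead of time, so it is normally served from the browser cache when you later display it under the same URL. That achieves what you were after with "saving the images as strings", without the string-encoding overhead.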
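For the "preloader on steroids" the question asks for, the same `new Image()` trick can be wrapped so every page reports when it finishes, which is enough to drive a progress bar. This is a minimal sketch, not a specific library's API: `preloadImages`, `onProgress`, and the `createImage` parameter (there only so the function can be run outside a browser) are hypothetical names, and the page file names in the usage comment are placeholders.

```javascript
// Preload a list of image URLs, calling onProgress(loaded, total) as each one
// finishes. Resolves with one { url, ok, img } entry per URL, in input order.
function preloadImages(urls, { onProgress = () => {}, createImage = () => new Image() } = {}) {
  let done = 0;
  return Promise.all(
    urls.map(
      (url) =>
        new Promise((resolve) => {
          const img = createImage();
          const finish = (ok) => {
            done += 1;
            onProgress(done, urls.length); // e.g. update a progress bar
            resolve({ url, ok, img });
          };
          img.onload = () => finish(true);
          img.onerror = () => finish(false); // a broken page shouldn't stall the rest
          img.src = url; // setting src starts the download
        })
    )
  );
}

// Usage in a browser (file names and the <progress> element are placeholders):
// const bar = document.querySelector("progress");
// preloadImages(["page-001.jpg", "page-002.jpg", "page-003.jpg"], {
//   onProgress: (loaded, total) => { bar.value = loaded / total; },
// }).then((results) => { /* show the first page */ });
```

All downloads are started at once and the browser queues them according to its own connection limits. If you'd rather have pages arrive strictly in reading order, you could chain the promises so each page starts after the previous one finishes, at the cost of some parallelism.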
