I'm interested in peer-to-peer connections in the browser. Since this seems to be possible with WebRTC, I'm wondering how exactly it works.

I've read some explanations and seen diagrams about it, and it's now clear to me that connection establishment goes through a server. The server seems to exchange some data between the clients that want to connect to each other, so that they can start a direct connection that is independent of the server.

But that's exactly what I don't understand. Until now, I thought the only way to create a connection was to listen on a port on computer A and connect to that port from computer B. But this does not seem to be the case in WebRTC. I think neither of the clients starts to listen on a port. Somehow, they can create a connection without listening on ports and accepting connections. Neither client A nor client B starts acting as a server.

But how? What data is exchanged over the WebRTC server that the clients can use to connect to each other?

Thanks for your explanations for this :)

Edit

I found this article. It's not related to WebRTC, but I think it answers part of my question. I'm not sure, though. It would still be cool if someone could explain it to me and give me some additional links.

WebRTC (Web Real-Time Communication) is an open-source project and set of standards that enables real-time communication and data sharing between browsers and devices. It lets two browsers exchange voice, video, and arbitrary data with each other, in both directions, in real time.

Unlike most other browser communication, which uses the Transmission Control Protocol (TCP), WebRTC transports its data over the User Datagram Protocol (UDP). Real-time data values timeliness over reliability, which is the primary reason UDP is the preferred transport for it.

WebRTC hands an SDP offer to the client JS app, which sends it (however the app wants) to the other device; that device uses it to generate an SDP answer.

The trick is that the SDP includes ICE candidates (effectively "try to talk to me at this IP address and this port"). ICE works to punch open ports in the firewalls; though if both sides are behind symmetric NATs this generally won't be possible, and an alternative candidate (on a TURN server) can be used instead.
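To make "try to talk to me at this IP address and this port" concrete, here is a minimal sketch that splits one candidate line into its fields. The `IceCandidate` interface and `parseCandidate` function are hypothetical names for illustration, the addresses are documentation examples, and optional trailing fields such as `raddr`/`rport` are simply ignored.

```typescript
// Fields of one ICE candidate line, as carried in SDP or sent on its own.
interface IceCandidate {
  foundation: string;
  component: number; // 1 = RTP, 2 = RTCP
  transport: string; // "udp" or "tcp"
  priority: number;
  address: string;
  port: number;
  type: string; // "host", "srflx", "prflx" or "relay"
}

// Hypothetical helper: parse "candidate:<foundation> <component> <transport>
// <priority> <address> <port> typ <type> ..." into an object.
function parseCandidate(line: string): IceCandidate {
  // Strip an optional "a=" prefix, then the "candidate:" keyword.
  const body = line.trim().replace(/^a=/, "").replace(/^candidate:/, "");
  const parts = body.split(/\s+/);
  if (parts.length < 8 || parts[6] !== "typ") {
    throw new Error("not a well-formed candidate line: " + line);
  }
  return {
    foundation: parts[0],
    component: Number(parts[1]),
    transport: parts[2].toLowerCase(),
    priority: Number(parts[3]),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7],
  };
}

// A made-up server-reflexive candidate: the public address a STUN server saw.
const sampleLine =
  "candidate:842163049 1 udp 1694498815 203.0.113.7 54321 typ srflx raddr 192.168.1.10 rport 54321";
const parsedCandidate = parseCandidate(sampleLine);
console.log(
  `${parsedCandidate.type} candidate: try ${parsedCandidate.address}:${parsedCandidate.port} over ${parsedCandidate.transport}`
);
```

In a browser you would not normally parse this yourself; the string is passed through the signalling channel and handed back to the peer connection on the other side.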

Once they're talking directly (or via TURN, which is effectively a packet-mirror), they can open a DTLS connection and use it to key the DTLS-SRTP media streams, and to send DataChannels over DTLS.

Edit: Acronyms are explained here: http://blog.1click.io/10-jargons-abbreviations-for-webrtc-fans/. For the rest, there is Google. Most of these are defined by the IETF (http://ietf.org/).

Edit 2: Firefox and Chrome (and the spec) have moved to using "trickle" for ICE candidates, so the ICE candidates are generally added after the fact to the PeerConnection and exchanged independently of the initial SDP (though you can wait until the initial candidates are ready before sending an offer, and bundle them together). See https://webrtcglossary.com/trickle-ice/ and https://datatracker.ietf.org/doc/draft-ietf-ice-trickle/

This document provides a quick and abstract introduction to WebRTC. In order to get more information about WebRTC please look at the Further Reading section at the end of this document.

WebRTC (Web Real-Time Communication) is a set of technologies developed for peer-to-peer, duplex, real-time communication between browsers. As its name suggests, it is compatible with the Web, and it is a W3C standard. One of the important features of WebRTC is that it works even when the peers are behind NAT.

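WebRTC uses several technologies to provide real-time peer-to-peer communication between browsers. These technologies are SDP (Session Description Protocol), ICE (Interactive Connectivity Establishment) and RTP (Real-time Transport Protocol).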

One more thing is needed to run WebRTC: a Signalling Server. However, there is no defined standard for implementing a signalling server; each implementation does it in its own style. More information about the Signalling Server is given later in this section.

Let's give some quick info about the technologies above.

SDP is a simple protocol used to describe which codecs each browser supports. For instance, assume that there are two peers (Client A and Client B) that will be connected through WebRTC. Client A and Client B each create an SDP string that defines which codecs they support. For example, Client A may support the H264, VP8 and VP9 codecs for video and the Opus and PCM codecs for audio. Client B may support only H264 for video and only Opus for audio. In this case, the codecs used between Client A and Client B are H264 and Opus. If the peers have no codecs in common, peer-to-peer communication cannot be established.

You may be wondering how these SDP strings are sent from one peer to the other. This is where the Signalling Server comes in.
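The Client A / Client B example boils down to intersecting two codec lists. The sketch below illustrates only that idea; the `Codecs` type and `commonCodecs` function are made-up names, and real SDP negotiation also covers payload types, codec parameters and preference order.

```typescript
// Codec names each peer advertises, split by media kind.
type Codecs = { video: string[]; audio: string[] };

// Keep only the codecs that appear in both lists, in the first peer's order.
function commonCodecs(a: Codecs, b: Codecs): Codecs {
  const both = (xs: string[], ys: string[]): string[] =>
    xs.filter((x) => ys.indexOf(x) !== -1);
  return { video: both(a.video, b.video), audio: both(a.audio, b.audio) };
}

const codecsA: Codecs = { video: ["H264", "VP8", "VP9"], audio: ["Opus", "PCM"] };
const codecsB: Codecs = { video: ["H264"], audio: ["Opus"] };

const agreedCodecs = commonCodecs(codecsA, codecsB);
console.log(agreedCodecs); // { video: [ 'H264' ], audio: [ 'Opus' ] }

// An empty list for a media kind means that kind cannot be negotiated.
const canConnect = agreedCodecs.video.length > 0 && agreedCodecs.audio.length > 0;
console.log(canConnect); // true
```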

ICE is the magic that establishes a connection between peers even if they are behind NAT. Let's assume again that Client A and Client B want to connect, and take a look at how ICE is used for that.

Client A gathers its addresses, the local one from its own network interfaces and the public Internet one by asking a STUN server, and sends these addresses to Client B through the Signalling Server. Each address gathered this way is called an ICE candidate.

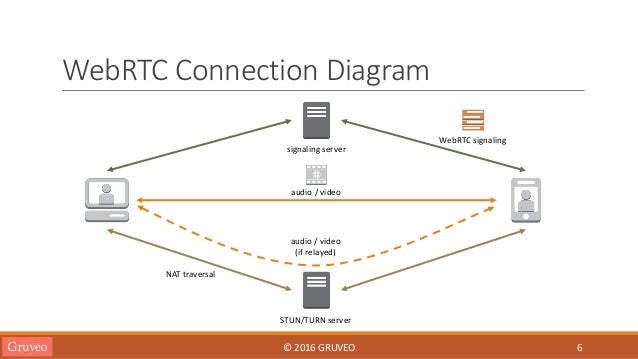

In the image above, there are two servers. One of them is a STUN server and the other is a TURN server.

The STUN server is used to let Client A learn its public address. For example, our computers generally have one local address in the 192.168.0.0 network, and there is a second address that we see when we visit www.whatismyip.com; this is actually the public IP address of our Internet gateway (modem, router, etc.). So, to define it: a STUN server tells a peer which public IP address and port it appears to have from the outside, which the peer combines with the local addresses it already knows. Btw, Google provides a free STUN server (stun.l.google.com:19302).
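For a feel of how small the STUN exchange is, here is a sketch that builds the 20-byte header of a STUN Binding Request following the RFC 5389 layout (message type, length, magic cookie, transaction ID). `buildBindingRequest` is a hypothetical helper name. The sketch only constructs the bytes; actually sending them needs a UDP socket, which the browser handles internally, and the server's reply carries the public address and port it observed.

```typescript
// Build the 20-byte header of a STUN Binding Request (RFC 5389 layout).
function buildBindingRequest(transactionId: Uint8Array): Uint8Array {
  if (transactionId.length !== 12) {
    throw new Error("transaction ID must be 12 bytes");
  }
  const packet = new Uint8Array(20);
  const view = new DataView(packet.buffer);
  view.setUint16(0, 0x0001); // message type: Binding Request
  view.setUint16(2, 0); // message length: no attributes follow the header
  view.setUint32(4, 0x2112a442); // fixed magic cookie
  packet.set(transactionId, 8); // 96-bit transaction ID
  return packet;
}

// Illustration only: Math.random is not a cryptographically secure source.
const transactionId = new Uint8Array(12);
for (let i = 0; i < transactionId.length; i++) {
  transactionId[i] = Math.floor(Math.random() * 256);
}

const bindingRequest = buildBindingRequest(transactionId);

// Print the packet as hex bytes.
let hexDump = "";
for (let i = 0; i < bindingRequest.length; i++) {
  hexDump += ("0" + bindingRequest[i].toString(16)).slice(-2) + " ";
}
console.log(hexDump.trim());
```

The first eight bytes are always `00 01 00 00 21 12 a4 42` for an attribute-free request; only the transaction ID changes between requests.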

There is one more server in the image, the TURN server. The TURN server is used when a peer-to-peer connection cannot be established between the peers. It simply relays the data between them.

Client B does the same: it gathers its local and public IP addresses, using the STUN server for the public one, and sends these addresses to Client A through the Signalling Server.

Client A receives Client B's addresses and tries each one by sending special pings in order to create a connection with Client B. If Client A receives a response from an address, it puts that address in a list along with its response time and other performance metrics. Finally, Client A chooses the best address according to its performance.

Client B does the same in order to connect to Client A.
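The selection step described above can be sketched as "ignore addresses that never answered, then take the fastest responder". This is a deliberate simplification that mirrors the description: the `ProbeResult` type, the `pickBest` function and the sample numbers are assumptions, and real ICE implementations additionally rank candidate pairs using the priorities carried in the candidates.

```typescript
// Outcome of pinging one remote candidate address.
interface ProbeResult {
  address: string;
  port: number;
  rttMs: number | null; // null means no response arrived
}

// Return the responding candidate with the lowest round-trip time, if any.
function pickBest(results: ProbeResult[]): ProbeResult | null {
  let best: ProbeResult | null = null;
  let bestRtt = Infinity;
  for (const result of results) {
    if (result.rttMs !== null && result.rttMs < bestRtt) {
      best = result;
      bestRtt = result.rttMs;
    }
  }
  return best;
}

// Made-up probe results for Client B's candidates, as seen from Client A.
const probeResults: ProbeResult[] = [
  { address: "192.168.1.20", port: 50000, rttMs: null }, // private address, unreachable
  { address: "198.51.100.4", port: 61000, rttMs: 38 }, // public address learned via STUN
  { address: "203.0.113.50", port: 3478, rttMs: 95 }, // relayed address on a TURN server
];

const chosenCandidate = pickBest(probeResults);
console.log(chosenCandidate); // the 198.51.100.4 entry
```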

RTP is a mature protocol for transmitting real-time data. It usually runs over UDP. Audio and video are transmitted with RTP in WebRTC. RTP has a sister protocol, RTCP (RTP Control Protocol), which provides QoS feedback for RTP communication. RTP is also used in RTSP (Real Time Streaming Protocol).

The last part is the Signalling Server, which is not defined in WebRTC. As mentioned above, the Signalling Server is used to send SDP strings and ICE candidates between Client A and Client B. The Signalling Server also decides which peers get connected to each other. WebSocket is generally used for communication with Signalling Servers.
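Because signalling is left to the application, the server's job can be as small as "forward this message to that peer". The sketch below models that with an in-memory relay; `SignallingRelay`, `SignalMessage` and the peer IDs are invented for illustration, and a real deployment would sit behind WebSocket connections rather than direct function calls.

```typescript
// The two kinds of payload the text mentions: SDP strings and ICE candidates.
type SignalMessage =
  | { kind: "offer" | "answer"; sdp: string }
  | { kind: "candidate"; candidate: string };

type Handler = (from: string, message: SignalMessage) => void;

// Hypothetical in-memory stand-in for a signalling server.
class SignallingRelay {
  private peers = new Map<string, Handler>();

  // Register a peer and the callback that receives its messages.
  join(peerId: string, onMessage: Handler): void {
    this.peers.set(peerId, onMessage);
  }

  // Forward a message unchanged; the relay never inspects SDP or candidates.
  send(from: string, to: string, message: SignalMessage): boolean {
    const deliver = this.peers.get(to);
    if (!deliver) return false; // unknown recipient
    deliver(from, message);
    return true;
  }
}

const relay = new SignallingRelay();
const inboxB: string[] = [];

relay.join("A", () => {});
relay.join("B", (from, message) => {
  inboxB.push(`${from}:${message.kind}`);
});

// Client A sends its offer, then a candidate (placeholder payloads).
relay.send("A", "B", { kind: "offer", sdp: "v=0" });
relay.send("A", "B", {
  kind: "candidate",
  candidate: "candidate:1 1 udp 2130706431 192.168.1.10 50000 typ host",
});

console.log(inboxB); // [ 'A:offer', 'A:candidate' ]
```

Once both sides have exchanged their descriptions and candidates this way, the relay is no longer involved in the media or data path.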

Within the last year, all browsers, including Safari and Edge, have released new versions supporting WebRTC. Chrome, Firefox and Opera had already supported WebRTC for a while. The video codec common to all browsers is H264. For audio, Opus is common to all browsers. PCM can also be used as an audio codec, but AAC is not used because of licensing issues, even though it is supported in all browsers. IP cameras generally support H264 for video and PCM or AAC for audio.

Btw, I am a developer at Ant Media Server, which supports scalable one-to-many WebRTC and peer-to-peer WebRTC connections.
