I am trying to implement a neural network in JavaScript, and my project's specifications favor an implementation with separate objects for each node and layer. I am rather new to programming neural networks, and I have run into a few snags with the backpropagation training of the network. I can't find an explanation for why the backpropagation algorithm fails to train the network properly over the training epochs.

I have followed tutorials on a few sites as closely as possible, including this one:

http://galaxy.agh.edu.pl/~vlsi/AI/backp_t_en/backprop.html

Here is a link to the original code: http://jsfiddle.net/Wkrgu/5/

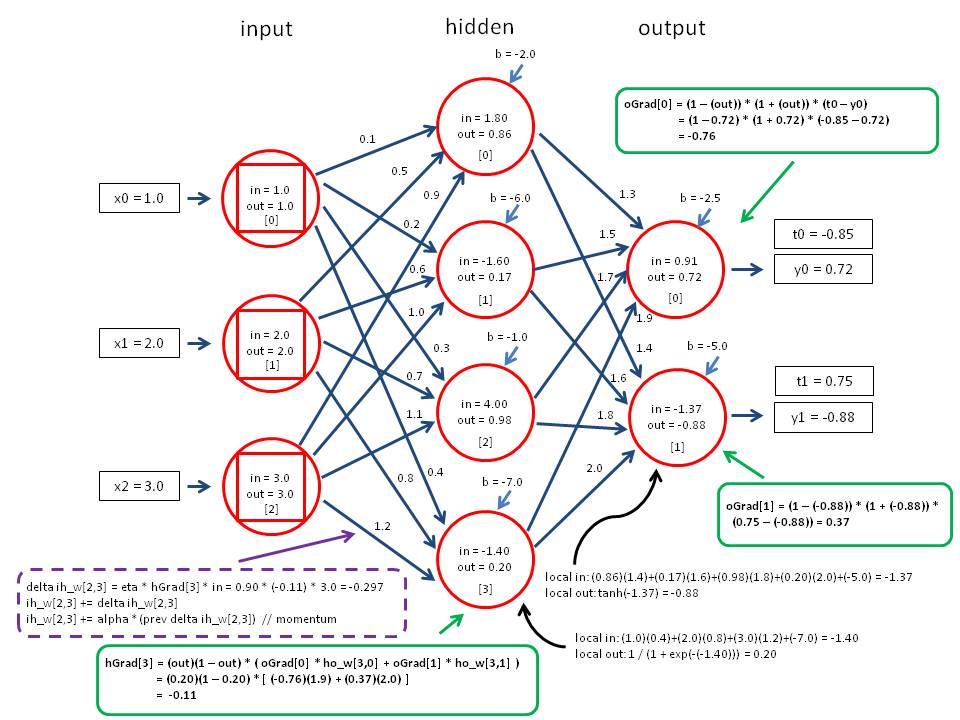

Here is what I am trying to do and, as far as I can tell, what is happening. After calculating the derivative values and the error for each node/neuron, I run this weight-update code:

    // Once all gradients are calculated, work forward and calculate
    // the new weights. w = w + (lr * df/de * in)
    for(i = 0; i < this._layers.length; i++) {
        // For each neuron in each layer, ...
        for(j = 0; j < this._layers[i]._neurons.length; j++) {
            neuron = this._layers[i]._neurons[j];
            // Modify the bias.
            neuron.bias += this.options.learningRate * neuron.gradient;
            // For each weight, ...
            for(k = 0; k < neuron.weights.length; k++) {
                // Modify the weight by multiplying the weight by the
                // learning rate and the input of the neuron preceding.
                // If no preceding layer, then use the input layer.
                neuron.deltas[k] = this.options.learningRate * neuron.gradient * (this._layers[i-1] ? this._layers[i-1]._neurons[k].input : input[k]);
                neuron.weights[k] += neuron.deltas[k];
                neuron.weights[k] += neuron.momentum * neuron.previousDeltas[k];
            }
            // Set previous delta values.
            neuron.previousDeltas = neuron.deltas.slice();
        }
    }

The gradient property is defined as:

    error = 0.0;
    // So for every neuron in the following layer, get the
    // weight corresponding to this neuron.
    for(k = 0; k < this._layers[i+1]._neurons.length; k++) {
        // And multiply it by that neuron's gradient
        // and add it to the error calculation.
        error += this._layers[i+1]._neurons[k].weights[j] * this._layers[i+1]._neurons[k].gradient;
    }
    // Once you have the error calculation, multiply it by
    // the derivative of the activation function to get
    // the gradient of this neuron.
    neuron.gradient = output * (1 - output) * error;
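
Written as a formula, the gradient code above appears to match the usual hidden-layer backpropagation rule for a sigmoid activation. The symbols are my own labels, not names from the code: $o_j$ is the output of neuron $j$ in layer $i$, $w_{kj}$ is `weights[j]` of neuron $k$ in layer $i+1$, and $\delta_k$ is that neuron's `gradient`.

```latex
\delta_j = o_j \,(1 - o_j) \sum_{k} w_{kj}\, \delta_k
```

The factor $o_j(1 - o_j)$ is the derivative of the sigmoid expressed through its own output, which is why the code can compute it from `output` alone.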

My guess is that I am updating weights too fast or that I am updating them by multiplying them by the wrong values entirely. Comparing to the formulas I can find on the subject, I feel like I am following them pretty thoroughly, but I am obviously doing something very wrong.

When I use this training data, I get these results:

    a.train([0,0], [0]);
    a.train([0,1], [1]);
    a.train([1,0], [1]);

    console.log(a.input([0,0])); // [ 0.9960981505402279 ]
    console.log(a.input([1,0])); // [ 0.9957925569461975 ]
    console.log(a.input([0,1])); // [ 0.9964499429402672 ]
    console.log(a.input([1,1])); // [ 0.996278252201647 ]

UPDATE: Here is a link to the fixed code: http://jsfiddle.net/adamthorpeg/aUF4c/3/ Note: it does not train until the error is tolerable for every input, so you can still get inaccurate results once the error becomes tolerable for just one ideal value. To train the network fully, training must continue until the errors for all inputs are tolerable.

I found the answer to my problem. The answer is twofold:

First, the network was suffering from the problem of "catastrophic forgetting". I was training it on one input/ideal-value pair at a time, rather than cycling through every pair within each training epoch.
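
A minimal sketch of what cycling through every pair in each epoch could look like. This is not the code from the fiddle: the single sigmoid neuron and the names `trainStep` and `trainAll` are hypothetical stand-ins, and the `maxEpochs` cap is my own assumption to keep the loop from running forever. It stops only when the worst error seen in an epoch is tolerable.

```javascript
// Hypothetical stand-in for the network: one sigmoid neuron.
function sigmoid(x) {
    return 1 / (1 + Math.exp(-x));
}

function predict(neuron, input) {
    let sum = neuron.bias;
    for (let k = 0; k < input.length; k++) {
        sum += neuron.weights[k] * input[k];
    }
    return sigmoid(sum);
}

// One gradient step on a single pair.
// Returns the absolute error measured before the step.
function trainStep(neuron, input, ideal, learningRate) {
    const output = predict(neuron, input);
    const gradient = (ideal - output) * output * (1 - output);
    neuron.bias += learningRate * gradient;
    for (let k = 0; k < input.length; k++) {
        neuron.weights[k] += learningRate * gradient * input[k];
    }
    return Math.abs(ideal - output);
}

// Visit every pair once per epoch, and stop only when the worst
// error in that epoch is below the tolerance.
// Returns the number of epochs used, or -1 if maxEpochs was reached.
function trainAll(neuron, pairs, options) {
    for (let epoch = 1; epoch <= options.maxEpochs; epoch++) {
        let worstError = 0;
        for (const pair of pairs) {
            const error = trainStep(neuron, pair.input, pair.ideal, options.learningRate);
            worstError = Math.max(worstError, error);
        }
        if (worstError < options.tolerance) {
            return epoch;
        }
    }
    return -1;
}

const pairs = [
    { input: [0, 0], ideal: 0 },
    { input: [0, 1], ideal: 1 },
    { input: [1, 0], ideal: 1 }
];
const neuron = { weights: [0, 0], bias: 0 };
const epochsUsed = trainAll(neuron, pairs, {
    learningRate: 0.5,
    tolerance: 0.1,
    maxEpochs: 100000
});
console.log(epochsUsed, pairs.map(p => predict(neuron, p.input)));
```

Because every pair is visited in every epoch, no single pair gets to overwrite what the others taught the weights, which is the failure I was seeing with back-to-back `a.train(...)` calls.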

Second, in the line:

    neuron.deltas[k] = this.options.learningRate * neuron.gradient * (this._layers[i-1] ? this._layers[i-1]._neurons[k].input : input[k]);

I was multiplying the learning rate and gradient (the derivative calculation) by the previous neuron's input value rather than by its output value, which is what the weight actually receives as its input. Hence, the correct code for that line should have been:

    neuron.deltas[k] = this.options.learningRate * neuron.gradient * (this._layers[i-1] ? this._layers[i-1]._neurons[k].output : input[k]);
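
To see the difference in isolation, here is a small sketch of the corrected update for a single neuron. The helper `updateWeights` and the sample values are hypothetical, and momentum is left out for brevity. The property names `weights`, `deltas`, `gradient`, `input` and `output` mirror the ones in my code.

```javascript
function sigmoid(x) {
    return 1 / (1 + Math.exp(-x));
}

// Hypothetical helper: apply one weight update to a single neuron.
// `sourceValues` holds what feeds each weight: the previous layer's
// outputs, or the raw network input for the first layer.
function updateWeights(neuron, sourceValues, learningRate) {
    for (let k = 0; k < neuron.weights.length; k++) {
        neuron.deltas[k] = learningRate * neuron.gradient * sourceValues[k];
        neuron.weights[k] += neuron.deltas[k];
    }
    return neuron;
}

// Two neurons of a previous layer, each with its weighted-sum input
// and the sigmoid output that is actually passed forward.
const previousLayer = [
    { input: 2.0, output: sigmoid(2.0) },
    { input: -1.0, output: sigmoid(-1.0) }
];

const hiddenNeuron = { weights: [0.5, -0.5], deltas: [0, 0], gradient: 0.1 };

// Correct: feed the previous layer's outputs, not its inputs.
updateWeights(hiddenNeuron, previousLayer.map(n => n.output), 0.5);
console.log(hiddenNeuron.deltas, hiddenNeuron.weights);
```

In this example the second previous neuron has a negative `input` but a positive sigmoid `output`, so using `.input` would not just scale that delta wrongly, it would flip its sign.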

This resource was invaluable: http://blog.zabarauskas.com/backpropagation-tutorial/
