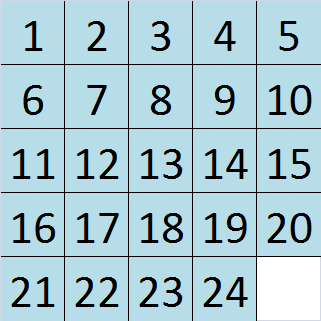

I am trying to find a way to programmatically solve a 24-piece sliding puzzle in a reasonable amount of time and moves. Here is an example of the solved state in the puzzle I am describing:

I have already found that the IDA* algorithm works fairly well to accomplish this for a 15-puzzle (4x4 grid). The IDA* algorithm is able to find the lowest number of moves for any 4x4 sliding puzzle in a very reasonable amount of time. I ran an adaptation of this code to test 4x4 sliding puzzles and was able to significantly reduce runtime further by using PyPy. Unfortunately, when this code is adapted for 5x5 sliding puzzles it runs horribly slow. I ran it for over an hour and eventually just gave up on seeing it finish, whereas it ran for only a few seconds on 4x4 grids. I understand this is because the number of nodes that need to searched goes up exponentially as the grid increases. However, I am not looking to find the optimal solution to a 5x5 sliding puzzle, only a solution that is close to optimal. For example, if the optimal solution for a given puzzle was 120 moves, then I would be satisfied with any solution that is under 150 moves and can be found in a few minutes.

Are there any specific algorithms that might accomplish this?

It as been proved that finding the fewest number of moves of n-Puzzle is NP-Complete, see Daniel Ratner and Manfred Warmuth, The (n2-1)-Puzzle and Related Relocation Problems, Journal of Symbolic Computation (1990) 10, 111-137.

Interesting facts reviewed in Graham Kendall, A Survey of NP-Complete Puzzles, 2008:

Therefore stopping the computation to change the methodology was the correct things to do.

It seems there is an available algorithm in polynomial time that can find sub-optimal solutions, see Ian Parberry, Solving the (n^2−1)-Puzzle with 8/3n^3 Expected Moves, Algorithms 2015, 8(3), 459-465. It may be what you are looking for.

IDA* works great up to a 4x4 puzzle, because that's 'just' 16! (20,922,789,888,000) possible states. A 5x5 puzzle has 25! (15,511,210,043,330,985,984,000,000) possible states, a factor of 740k million larger.

You need to switch strategies. The 'easiest' method solves the puzzle along the top row and then left column first, repeatedly, until you have a 3x3 puzzle, which can easily be solved using existing techniques.

Solving the puzzle involves 3 different phases you alternate between:

So phases 1 and 2 alternate until you can run phase 3; after solving the top 5 tiles (phase 1) you solve the left-most 4 tiles on the other rows (phase 2), then the top row of the remainder of the puzzle (4 tiles, phase 1), then the left column (3 tiles, phase 2), then solve phase 3. Phases 1 and 2 are basically identical, only the orientation differs, and for phase 2 the first tile is already in place.

Phases 1 and 2 are easily solved using lookup tables, no search required; you are moving specific tiles and don't care about anything else:

This doesn't give you the shortest path to a solution, but with no state search the problem is strictly bound and the worst case scenario known. Solving the first row and column of a 5x5 puzzle takes at most 427 moves this way, and 256 moves for the next row and column.

This algorithm was first described by Ian Parberry, in a paper titled A real-time algorithm for the (n2 − 1)-puzzle in 1995. I think that DSolving: a novel and efficient intelligent algorithm for large-scale sliding puzzles by GuiPing Wang and Ren Li describes a more efficient lookup-table method still, but as the paper isn't yet available for free I haven't studied it yet.

If you love us? You can donate to us via Paypal or buy me a coffee so we can maintain and grow! Thank you!

Donate Us With